Posts Tagged ‘The Atlantic’

Back to the Future

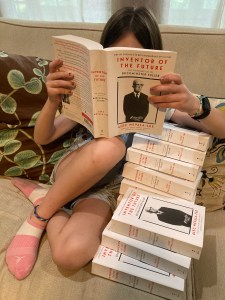

It’s hard to believe, but the paperback edition of Inventor of the Future: The Visionary Life of Buckminster Fuller is finally in stores today. As far as I’m concerned, this is the definitive version of this biography—it incorporates a number of small fixes and revisions—and it marks the culmination of a journey that started more than five years ago. It also feels like a milestone in an eventful writing year that has included a couple of pieces in the New York Times Book Review, an interview with the historian Richard Rhodes on Christopher Nolan’s Oppenheimer for The Atlantic online, and the usual bits and bobs elsewhere. Most of all, I’ve been busy with my upcoming biography of the Nobel Prize-winning physicist Luis W. Alvarez, which is scheduled to be published by W.W. Norton sometime in 2025. (Oppenheimer fans with sharp eyes and good memories will recall Alvarez as the youthful scientist in Lawrence’s lab, played by Alex Wolff, who shows Oppenheimer the news article announcing that the atom has been split. And believe me, he went on to do a hell of a lot more. I can’t wait to tell you about it.)

Updike’s ladder

Note: I’m taking the day off, so I’m republishing a post that originally appeared, in a slightly different form, on September 13, 2017.

Last year, the author Anjali Enjeti published an article in The Atlantic titled “Why I’m Still Trying to Get a Book Deal After Ten Years.” If just reading those words makes your palms sweat and puts your heart through a few sympathy palpitations, congratulations—you’re a writer. No matter where you might be in your career, or what length of time you mentally insert into that headline, you can probably relate to what Enjeti writes:

Ten years ago, while sitting at my computer in my sparsely furnished office, I sent my first email to a literary agent. The message included a query letter—a brief synopsis describing the personal-essay collection I’d been working on for the past six years, as well as a short bio about myself. As my third child kicked from inside my pregnant belly, I fantasized about what would come next: a request from the agent to see my book proposal, followed by a dream phone call offering me representation. If all went well, I’d be on my way to becoming a published author by the time my oldest child started first grade.

“Things didn’t go as planned,” Enjeti says dryly, noting that after landing and leaving two agents, she’s been left with six unpublished manuscripts and little else to show for it. She goes on to share the stories of other writers in the same situation, including Michael Bourne of Poets & Writers, who accurately calls the submission process “a slow mauling of my psyche.” And Enjeti wonders: “So after sixteen years of writing books and ten years of failing to find a publisher, why do I keep trying? I ask myself this every day.”

It’s a good question. As it happens, I first encountered her article while reading the authoritative biography Updike by Adam Begley, which chronicles a literary career that amounts to the exact opposite of the ones described above. Begley’s account of John Updike’s first acceptance from The New Yorker—just months after his graduation from Harvard—is like lifestyle porn for writers:

He never forgot the moment when he retrieved the envelope from the mailbox at the end of the drive, the same mailbox that had yielded so many rejection slips, both his and his mother’s: “I felt, standing and reading the good news in the midsummer pink dusk of the stony road beside a field of waving weeds, born as a professional writer.” To extend the metaphor…the actual labor was brief and painless: he passed from unpublished college student to valued contributor in less than two months.

If you’re a writer of any kind, you’re probably biting your hand right now. And I haven’t even gotten to what happened to Updike shortly afterward:

A letter from Katharine White [of The New Yorker] dated September 15, 1954 and addressed to “John H. Updike, General Delivery, Oxford,” proposed that he sign a “first-reading agreement,” a scheme devised for the “most valued and most constant contributors.” Up to this point, he had only one story accepted, along with some light verse. White acknowledged that it was “rather unusual” for the magazine to make this kind of offer to a contributor “of such short standing,” but she and Maxwell and Shawn took into consideration the volume of his submissions…and their overall quality and suitability, and decided that this clever, hard-working young man showed exceptional promise.

Updike was twenty-two years old. Even now, more than half a century later and with his early promise more than fulfilled, it’s hard to read this account without hating him a little. Norman Mailer—whose debut novel, The Naked and the Dead, appeared when he was twenty-five—didn’t pull any punches in “Some Children of the Goddess,” an essay on his contemporaries that was published in Esquire in 1963: “[Updike’s] reputation has traveled in convoy up the Avenue of the Establishment, The New York Times Book Review, blowing sirens like a motorcycle caravan, the professional muse of The New Yorker sitting in the Cadillac, membership cards to the right Fellowships in his pocket.” Even Begley, his biographer, acknowledges the singular nature of his subject’s rise:

It’s worth pausing here to marvel at the unrelieved smoothness of his professional path…Among the other twentieth-century American writers who made a splash before their thirtieth birthday…none piled up accomplishments in as orderly a fashion as Updike, or with as little fuss…This frictionless success has sometimes been held against him. His vast oeuvre materialized with suspiciously little visible effort. Where there’s no struggle, can there be real art? The Romantic notion of the tortured poet has left us with a mild prejudice against the idea of art produced in a calm, rational, workmanlike manner (as he put it, “on a healthy basis of regularity and avoidance of strain”), but that’s precisely how Updike got his start.

Begley doesn’t mention that the phrase “regularity and avoidance of strain” is actually meant to evoke the act of defecation, but even this provides us with an odd picture of writerly contentment. As Dick Hallorann says in The Shining, the best movie about writing ever made: “You got to keep regular, if you want to be happy.”

If there’s a larger theme here, it’s that the sheer productivity and variety of Updike’s career—with its reliable production of uniform hardcover editions over the course of five decades—are inseparable from the “orderly” circumstances of his rise. Updike never lacked a prestigious venue for his talents, which allowed him to focus on being prolific. Writers whose publication history remains volatile and unpredictable, even after they’ve seen print, don’t always have the luxury of being so unruffled, and it can affect their work in ways that are almost subliminal. (A writer can’t survive ten years of chasing after a book deal without spending the entire time convinced that he or she is on the verge of a breakthrough, anticipating an ending that never comes, which may partially account for the prevalence in literary fiction of frustration and unresolved narratives. It also explains why it helps to be privileged enough to fail for years.) The short answer to Begley’s question is that struggle is good for a writer, but so is success, and you take what you can get, even as you’re transformed by it. I think on a monthly basis of what Nicholson Baker writes of Updike in his tribute U and I:

I compared my awkward public self-promotion too with a documentary about Updike that I saw in 1983, I believe, on public TV, in which, in one scene, as the camera follows his climb up a ladder at his mother’s house to put up or take down some storm windows, in the midst of this tricky physical act, he tosses down to us some startlingly lucid little felicity, something about “These small yearly duties which blah blah blah,” and I was stunned to recognize that in Updike we were dealing with a man so naturally verbal that he could write his fucking memoirs on a ladder!

We’re all on that ladder, including Enjeti, who I’m pleased to note finally scored her book deal—she has an essay collection in the works from the University of Georgia Press. Some are on their way up, some are headed down, and some are stuck for years on the same rung. But you never get anywhere if you don’t try to climb.

The end of flexibility

A few days ago, I picked up my old paperback copy of Steps to an Ecology of Mind, which collects the major papers of the anthropologist and cyberneticist Gregory Bateson. I’ve been browsing through this dense little volume since I was in my teens, but I’ve never managed to work through it all from beginning to end, and I turned to it recently out of a vague instinct that it was somehow what I needed. (Among other things, I’m hoping to put together a collection of my short stories, and I’m starting to see that many of Bateson’s ideas are relevant to the themes that I’ve explored as a science fiction writer.) I owe my introduction to his work, as with so many other authors, to Stewart Brand of The Whole Earth Catalog, who advised in one edition:

[Bateson] wandered thornily in and out of various disciplines—biology, ethnology, linguistics, epistemology, psychotherapy—and left each of them altered with his passage. Steps to an Ecology of Mind chronicles that journey…In recommending the book I’ve learned to suggest that it be read backwards. Read the broad analyses of mind and ecology at the end of the book and then work back to see where the premises come from.

This always seemed reasonable to me, so when I returned to it last week, I flipped immediately to the final paper, “Ecology and Flexibility in Urban Civilization,” which was first presented in 1970. I must have read it at some point—I’ve quoted from it several times on this blog before—but as I looked over it again, I found that it suddenly seemed remarkably urgent. As I had suspected, it was exactly what I needed to read right now. And its message is far from reassuring.

Bateson’s central point, which seems hard to deny, revolves around the concept of flexibility, or “uncommitted potentiality for change,” which he identifies as a fundamental quality of any healthy civilization. In order to survive, a society has to be able to evolve in response to changing conditions, to the point of rethinking even its most basic values and assumptions. Bateson proposes that any kind of planning for the future include a budget for flexibility itself, which is what enables the system to change in response to pressures that can’t be anticipated in advance. He uses the analogy of an acrobat who moves his arms between different positions of temporary instability in order to remain on the wire, and he notes that a viable civilization organizes itself in ways that allow it to draw on such reserves of flexibility when needed. (One of his prescriptions, incidentally, serves as a powerful argument for diversity as a positive good in its own right: “There shall be diversity in the civilization, not only to accommodate the genetic and experiential diversity of persons, but also to provide the flexibility and ‘preadaptation’ necessary for unpredictable change.”) The trouble is that a system tends to eat up its own flexibility whenever a single variable becomes inflexible, or “uptight,” compared to the rest:

Because the variables are interlinked, to be uptight in respect to one variable commonly means that other variables cannot be changed without pushing the uptight variable. The loss of flexibility spreads throughout the system. In extreme cases, the system will only accept those changes which change the tolerance limits for the uptight variable. For example, an overpopulated society looks for those changes (increased food, new roads, more houses, etc.) which will make the pathological and pathogenic conditions of overpopulation more comfortable. But these ad hoc changes are precisely those which in longer time can lead to more fundamental ecological pathology.

When I consider these lines now, it’s hard for me not to feel deeply unsettled. Writing in the early seventies, Bateson saw overpopulation as the most dangerous source of stress in the global system, and these days, we’re more likely to speak of global warming, resource depletion, and income inequality. Change a few phrases here and there, however, and the situation seems largely the same: “The pathologies of our time may broadly be said to be the accumulated results of this process—the eating up of flexibility in response to stresses of one sort or another…and the refusal to bear with those byproducts of stress…which are the age-old correctives.” Bateson observes, crucially, that the inflexible variables don’t need to be fundamental in themselves—they just need to resist change long enough to become a habit. Once we find it impossible to imagine life without fossil fuels, for example, we become willing to condone all kinds of other disruptions to keep that one hard-programmed variable in place. A civilization naturally tends to expand into any available pocket of flexibility, blowing through the budget that it should have been holding in reserve. The result is a society structured along lines that are manifestly rigid, irrational, indefensible, and seemingly unchangeable. As Bateson puts it grimly:

Civilizations have risen and fallen. A new technology for the exploitation of nature or a new technique for the exploitation of other men permits the rise of a civilization. But each civilization, as it reaches the limits of what can be exploited in that particular way, must eventually fall. The new invention gives elbow room or flexibility, but the using up of that flexibility is death.

And it’s difficult for me to read this today without thinking of all the aspects of our present predicament—political, environmental, social, and economic. Since Bateson sounded his warning half a century ago, we’ve consumed our entire budget of flexibility, largely in response to a single hard-programmed variable that undermined all the other factors that it was meant to sustain. At its best, the free market can be the best imaginable mechanism for ensuring flexibility, by allocating resources more efficiently than any system of central planning ever could. (As one prominent politician recently said to The Atlantic: “I love competition. I want to see every start-up business, everybody who’s got a good idea, have a chance to get in the market and try…Really what excites me about markets is competition. I want to make sure we’ve got a set of rules that lets everybody who’s got a good, competitive idea get in the game.” It was Elizabeth Warren.) When capital is concentrated beyond reason, however, and solely for its own sake, it becomes a weapon that can be used to freeze other cultural variables into place, no matter how much pain it causes. As the anonymous opinion writer indicated in the New York Times last week, it will tolerate a president who demeans the very idea of democracy itself, as long as it gets “effective deregulation, historic tax reform, a more robust military and more,” because it no longer sees any other alternative. And this is where it gets us. For most of my life, I was ready to defend capitalism as the best system available, as long as its worst excesses were kept in check by measures that Bateson dismissively describes as “legally slapping the wrists of encroaching authority.” I know now that these norms were far more fragile than I wanted to acknowledge, and it may be too late to recover. Bateson writes: “Either man is too clever, in which case we are doomed, or he was not clever enough to limit his greed to courses which would not destroy the ongoing total system. I prefer the second hypothesis.” And I do, too. But I no longer really believe it.

The beefeaters

Every now and then, I get the urge to write a blog post about Jordan Peterson, the Canadian professor of psychology who has unexpectedly emerged as a superstar pundit in the culture wars and the darling of young conservatives. I haven’t read any of Peterson’s books, and I’m not particularly interested in what I’ve seen of his ideas, so until now, I’ve managed to resist the temptation. What finally broke my resolve was a recent article in The Atlantic by James Hamblin, who describes how Peterson’s daughter Mikhaila has become an unlikely dietary guru by subsisting almost entirely on beef. As Hamblin writes:

[Mikhaila] Peterson described an adolescence that involved multiple debilitating medical diagnoses, beginning with juvenile rheumatoid arthritis…In fifth grade she was diagnosed with depression, and then later something called idiopathic hypersomnia (which translates to English as “sleeping too much, of unclear cause”—which translates further to sorry we really don’t know what’s going on). Everything the doctors tried failed, and she did everything they told her, she recounted to me. She fully bought into the system, taking large doses of strong immune-suppressing drugs like methotrexate…She started cutting out foods from her diet, and feeling better each time…Until, by December 2017, all that was left was “beef and salt and water,” and, she told me, “all my symptoms went into remission.”

As a result of her personal experiences, she began counseling people who were considering a similar diet, a role that she characterizes with what strikes me as an admirable degree of self-awareness: “They mostly want to see that I’m not dead.”

Mikhaila Peterson has also benefited from the public endorsement of her father, who has adopted the same diet, supposedly with positive results. According to Hamblin’s article:

In a July appearance on the comedian Joe Rogan’s podcast, Jordan Peterson explained how Mikhaila’s experience had convinced him to eliminate everything but meat and leafy greens from his diet, and that in the last two months he had gone full meat and eliminated vegetables. Since he changed his diet, his laundry list of maladies has disappeared, he told Rogan. His lifelong depression, anxiety, gastric reflux (and associated snoring), inability to wake up in the mornings, psoriasis, gingivitis, floaters in his right eye, numbness on the sides of his legs, problems with mood regulation—all of it is gone, and he attributes it to the diet…Peterson reiterated several times that he is not giving dietary advice, but said that many attendees of his recent speaking tour have come up to him and said the diet is working for them. The takeaway for listeners is that it worked for Peterson, and so it may work for them.

As Hamblin points out, not everyone agrees with this approach. He quotes Jack Gilbert, a professor of surgery at the University of Chicago: “Physiologically, it would just be an immensely bad idea. A terribly, terribly bad idea.” After rattling off a long list of the potential consequences, including metabolic dysfunction and cardiac problems, Gilbert concludes: “If [Mikhaila] does not die of colon cancer or some other severe cardiometabolic disease, the life—I can’t imagine.”

“There are few accounts of people having tried all-beef diets,” Hamblin writes, and it isn’t clear if he’s aware of one case study that was famous in its time. Buckminster Fuller—the futurist, architect, and designer best known for popularizing the geodesic dome—inspired a level of public devotion at his peak that can only be compared to Peterson’s, or even Elon Musk’s, and he ate little more than beef for the last two decades of his life. Fuller’s reasoning was slightly different, but the motivation and outcome were largely the same, as L. Steven Sieden relates in Buckminster Fuller’s Universe:

[Fuller] suddenly noticed his level of energy decreasing and decided to see if anything could be done about that change…Bucky resolved that since the majority of the energy utilized on earth originated from the sun, he should attempt to acquire the most highly concentrated solar energy available to him as food…He would be most effective if he ate more beef because cattle had eaten the vegetation and transformed the solar energy into a more protein-concentrated food. Hence, he began adhering to a regular diet of beef…His weight dropped back down to 140 pounds, identical to when he was in his early twenties. He also found that his energy level increased dramatically.

Fuller’s preferred cut was the relatively lean London broil, supplemented with salad, fruit, and Jell-O. As his friend Alden Hatch says in Buckminster Fuller: At Home in the Universe: “[London broil] is usually served in thin strips because it is so tough, but Bucky cheerfully chomps great hunks of it with his magnificent teeth.”

Unlike the Petersons, Fuller never seems to have inspired many others to follow his example. In fact, his diet, as Sieden tells it, “caused consternation among many of the holistic, health-oriented individuals to whom he regularly spoke during the balance of his life.” (It may also have troubled those who admired Fuller’s views on sustainability and conservation. As Hamblin notes: “Beef production at the scale required to feed billions of humans even at current levels of consumption is environmentally unsustainable.”) Yet the fact that we’re even talking about the diets of Jordan Peterson and Buckminster Fuller is revealing in itself. Hamblin ties it back to a desire for structure in times of uncertainty, which is precisely what Peterson holds out to his followers:

The allure of a strict code for eating—a way to divide the world into good foods and bad foods, angels and demons—may be especially strong at a time when order feels in short supply. Indeed there is at least some benefit to be had from any and all dietary advice, or rules for life, so long as a person believes in them, and so long as they provide a code that allows a person to feel good for having stuck with it and a cohort of like-minded adherents.

I’ve made a similar argument about diet here before. And Mikhaila Peterson’s example is also tangled up in fascinating ways with the difficulty that many women have in finding treatments for their chronic pain. But it also testifies to the extent to which charismatic public intellectuals like Fuller and Jordan Peterson, who have attained prominence in one particular subject, can insidiously become regarded as authority figures in matters far beyond their true area of expertise. There’s a lot that I admire about Fuller, and I don’t like much about Peterson, but in both cases, we should be just as cautious about taking their word on larger issues as we might when it comes to their diet. It’s more obvious with beef than it is with other aspects of our lives—but it shouldn’t be any easier to swallow.

A Hawk From a Handsaw, Part 3

Note: My article “The Campbell Machine,” which describes one of the strangest episodes in the history of Astounding Science Fiction, is now available online and in the July/August issue of Analog. To celebrate its publication, I’m republishing a series about an equally curious point of intersection between science fiction and the paranormal. This post combines two pieces that originally appeared, in substantially different form, on February 17 and December 6, 2017.

Last year, an excellent profile in The Atlantic by McKay Coppins attempted to answer a question that is both simpler and more complicated than it might initially seem—namely how a devout Christian like Mike Pence can justify hitching his career to the rise of a man whose life makes a mockery of the ideals that most evangelicals claim to value. You could cynically assume that Pence, like so many others, has coldly calculated that Trump’s support on a few key issues, like abortion, outweighs literally everything else that he could say or do, and you might be right. But Pence also seems to sincerely believe that he’s an instrument of divine will, a conviction that dates back at least to his successful campaign for the House of Representatives. Coppins writes:

By the time a congressional seat opened up ahead of the 2000 election, Pence was a minor Indiana celebrity and state Republicans were urging him to run. In the summer of 1999, as he was mulling the decision, he took his family on a trip to Colorado. One day while horseback riding in the mountains, he and Karen looked heavenward and saw two red-tailed hawks soaring over them. They took it as a sign, Karen recalled years later: Pence would run again, but this time there would be “no flapping.” He would glide to victory.

For obvious reasons, this anecdote caught my eye, but this version leaves out a number of details. As far as I can tell, it first appears in a profile that ran in Roll Call back in 2010. The article observes that Pence keeps a plaque on his desk that reads “No Flapping,” and it situates the incident, curiously, in Theodore Roosevelt National Park in North Dakota, not in Colorado:

“We were trying to make a decision as a family about whether to sell our house, move back home and make another run for Congress, and we saw these two red-tailed hawks coming up from the valley floor,” Pence says. He adds that the birds weren’t flapping their wings at all; instead, they were gliding through the air. As they watched the hawks, Pence’s wife told him she was onboard with a third run. “I said, ‘If we do it, we need to do it like those hawks. We just need to spread our wings and let God lift us up where he wants to take us,’” Pence remembers. “And my wife looked at me and said, ‘That’ll be how we do it, no flapping.’ So I keep that on my desk to remember every time my wings get sore, stop flapping.”

Neither article mentions it, but I’m reasonably sure that Pence was thinking of the verse in the Book of Job, which he undoubtedly knows well, that marks the only significant appearance of a hawk in the Bible: “Does the hawk fly by your wisdom, and stretch her wings toward the south?” As one scholarly commentary notes, with my italics added: “Aside from calling attention to the miraculous flight, this might refer to migration, or to the wonderful soaring exhibitions of these birds.”

So what does this have to do with the other hawks that I’ve been discussing here this week? In each case, it involves looking at the world—or at a work of literature or scripture—and extracting a meaning that can be applied to the present moment. It’s literally a form of augury, which originally referred to a form of divination based on the flight of birds. In my handy Eleventh Edition of the Encyclopedia Britannica, we read of its use in Rome:

The natural region to look to for signs of the will of Jupiter was the sky, where lightning and the flight of birds seemed directed by him as counsel to men. The latter, however, was the more difficult of interpretation, and upon it, therefore, mainly hinged the system of divination with which the augurs were occupied…[This included] signs from birds (signa ex avibus), with reference to the direction of their flight, and also to their singing, or uttering other sounds. To the first class, called alites, belonged the eagle and the vulture; to the second, called oscines, the owl, the crow and the raven. The mere appearance of certain birds indicated good or ill luck, while others had a reference only to definite persons or events. In matters of ordinary life on which divine counsel was prayed for, it was usual to have recourse to this form of divination.

In reality, as the risk consultant John C. Hulsman has recently observed of the Priestess of Apollo at Delphi, the augurs were meant to provide justification or counsel on matters of policy. As Cicero, who was an augur himself, wrote in De Divinatione: “I think that, although in the beginning augural law was established from a belief in divination, yet later it was maintained and preserved from considerations of political expediency.”

The flight or appearance of birds in the sky amounts to a source of statistically random noise, and it’s just as useful for divination as similar expedients are today for cryptography. And you don’t even need to look at the sky to get the noise that you need. As I’ve noted here before, you can draw whatever conclusion you like from a sufficiently rich and varied corpus of facts. Sometimes, as in the case of the hawks that I’ve been tracking in science fiction, it’s little more than an amusing game, but it can also assume more troubling forms. In the social sciences, all too many mental models come down to looking for hawks, noting their occurrences, and publishing a paper about the result. And in politics, whether out of unscrupulousness or expediency, it can be easy to find omens that justify the actions that we’ve already decided to take. It’s easy to make fun of Mike Pence for drawing meaning from two hawks in North Dakota, but it’s really no stranger than trying to make a case for this administration’s policy of family separation by selectively citing the Bible. (Incidentally, Uri Geller, who is still around, predicted last year that Donald Trump would win the presidential election, based primarily on the fact that Trump’s name contains eleven letters. Geller has a lot to say about the number eleven, which, if you squint just right, looks a bit like two hawks perched side by side, their heads in profile.) When I think of Pence’s hawks, I’m reminded of the rest of that passage from Job: “Its young ones suck up blood; and where the slain are, there it is.” But I also recall the bird of prey in a poem that is quoted more these days than ever: “Turning and turning in the widening gyre / The falcon cannot hear the falconer.” And a few lines later, Yeats evokes the sphinx, like an Egyptian god, slouching toward Bethlehem, “moving its slow thighs, while all about it / Wind shadows of the indignant desert birds.”

Donate here to The Young Center for Immigrant Children’s Rights and The Raices Family Reunification Bond Fund.

The ghost story

Back in March, I published a post here about the unpleasant personal life of Saul Bellow, whose most recent biographer, Zachary Leader, has amply documented the novelist’s physical violence toward his second wife Sondra Tschacbasov. After Bellow discovered the affair between Tschacbasov and his good friend Jack Ludwig, however, he contemplated something even worse, as James Atlas relates in his earlier biography: “At the Quadrangle Club in Chicago a few days later, Bellow talked wildly of getting a gun.” And I was reminded of this passage while reading an even more horrifying account in D.T. Max’s biography of David Foster Wallace, Every Love Story Is a Ghost Story, about the writer’s obsession with the poet and memoirist Mary Karr:

Wallace’s literary rebirth [in the proposal for Infinite Jest] did not coincide with any calming of his conviction that he had to be with Karr. Indeed, the opposite. In fact, one day in February, he thought briefly of committing murder for her. He called an ex-con he knew through his recovery program and tried to buy a gun. He had decided he would wait no longer for Karr to leave her husband; he planned to shoot him instead when he came into Cambridge to pick up the family dog. The ex-con called Larson, the head of [the addiction treatment center] Granada House, who told Karr. Wallace himself never showed up for the handover and thus ended what he would later call in a letter of apology “one of the scariest days in my life.” He wrote Larson in explanation, “I now know what obsession can make people capable of”—then added in longhand after—“at least of wanting to do.” To Karr at the time he insisted that the whole episode was an invention of the ex-con and she believed him.

Even at a glance, there are significant differences between these incidents. Bellow had treated Tschacbasov unforgivably, but his threat to buy a gun was part of an outburst of rage at a betrayal by his wife and close friend, and there’s no evidence that he ever tried to act on it—the only visible outcome was an episode in Herzog. Wallace, by contrast, not only contemplated murdering a man whose wife he wanted for himself, but he took serious steps to carry it out, and when Karr heard about it, he lied to her. By any measure, it’s the more frightening story. Yet they do have one striking point in common, which is the fact that they don’t seem to have inspired much in the way of comment or discussion. I only know about the Wallace episode because of a statement by Karr from earlier this week, in which she expressed her support for the women speaking out against Junot Díaz and noted that the violence that she experienced from Wallace was described as “alleged” by D.T. Max and The New Yorker. In his biography, Max writes without comment: “One night Wallace tried to push Karr from a moving car. Soon afterward, he got so mad at her that he threw her coffee table at her.” When shown these lines by a sympathetic reader on Twitter, Karr responded that Wallace also kicked her, climbed up the side of her house, and followed her five-year-old son home from school, and that she had to change her phone number twice to avoid him. Max, she said, “ignored” much of it, even though she showed him letters in Wallace’s handwriting confessing to his behavior. (In his original article in The New Yorker, Max merely writes: “One day, according to Karr, [Wallace] broke her coffee table.” And it wasn’t until years later that he revealed that Wallace had “broken” the table by throwing it at her.)

There’s obviously a lot to discuss here, but for reasons of my own, I’d like to approach it from the perspective of a biographer. I’ve just finished writing a biography about four men who were terrible husbands, in their own ways, to one or more wives, and I’m also keenly aware of how what seems like an omission can be the result of unseen pressures operating elsewhere in—or outside—the book. Yet Max has done himself no favors. In an interview with The Atlantic that has been widely shared, he speaks of Wallace’s actions with an aesthetic detachment that comes off now as slightly chilling:

One thing his letters make you feel is that he thought the word was God, and words were always worth putting down. Even in a letter to the head of his halfway house—where he apologizes for contemplating buying a gun to kill the writer Mary Karr’s husband—the craftsmanship of that letter is quite remarkable. You read it like a David Foster Wallace essay…I didn’t know that David had that [violence] in him. I was surprised, in general, with the intensity of violence in his personality. It was something I knew about him when I wrote the New Yorker piece, but it grew on me. It made me think harder about David and creativity and anger. But on the other end of the spectrum, he was also this open, emotional guy, who was able to cry, who intensely loved his dogs. He was all those things. That, in part, is why he’s a really fascinating guy and an honor to write about.

Max tops it off by quoting a “joke” from a note by Wallace: “Infinite Jest was just a means to Mary Karr’s end.” He helpfully adds: “A sexual pun.”

It’s no wonder that Karr is so furious, but if anything, I’m more impressed by her restraint. Karr is absurdly overqualified to talk about problems of biography, and there are times when you can feel her holding herself back. In her recent book The Art of Memoir, she writes in a chapter titled “The Truth Contract Twixt Writer and Reader”:

Forget how inventing stuff breaks a contract with the reader, it fences the memoirist off from the deeper truths that only surface in draft five or ten or twenty. Yes, you can misinterpret—happens all the time. “The truth ambushes you,” Geoffrey Wolff once said…But unless you’re looking at actual lived experience, the more profound meanings will remain forever shrouded. You’ll never unearth the more complex truths, the ones that counter that convenient first take on the past. A memoirist forging false tales to support his more comfortable notions—or to pump himself up for the audience—never learns who he is. He’s missing the personal liberation that comes from the examined life.

Replace “memoirist” with “biographer,” and you’re left with a sense of what was lost when Max concluded that Wallace’s violence only made him “a really fascinating guy and an honor to write about.” I won’t understate the difficulty of coming to terms with the worst aspects of one’s subject, and even Karr herself writes: “I still try to err on the side of generosity toward any character.” But it feels very much like a reluctance to deal honestly with facts that didn’t fit into the received notions of Wallace’s “complexity.” It can be hard to confront those ghosts. But not every ghost story has to be a love story.

Quote of the Day

[Preschool teacher] Maureen Ingram…said her students often tell different stories about a given piece of art depending on the day, perhaps because they weren’t sure what they intended to draw when they started the picture. “We as adults will often say, ‘I’m going to draw a horse,’ and we set out…and get frustrated when we can’t do it,” Ingram said. “They seem to take a much more sane approach, where they just draw, and then they realize, ‘It is a horse.’”

Quote of the Day

If truth is a path, then science explores it, and the brief stops along the way are where technologies begin.

—Alfred Adler, in The Atlantic

The pursuit of trivia

Over the last few months, my wife and I have been obsessively playing HQ Trivia, an online game show that until recently was available only on Apple devices. If you somehow haven’t encountered it by now, it’s a live video broadcast, hosted by the weirdly ingratiating comedian Scott Rogowsky, in which players are given the chance to answer twelve multiple-choice questions. If you get one wrong, you’re eliminated, but if you make it to the end, you split the prize—which ranges from a few hundred to thousands of dollars—with the remaining contestants. Early on, my wife and I actually made it to the winner’s circle four times, earning a total of close to fifty bucks. (Unfortunately, the game’s payout minimum means that we currently have seventeen dollars that we can’t cash out until we’ve won again, which at this point seems highly unlikely.) That was back when the pool of contestants on a typical evening consisted of fewer than ten thousand players. Last night, there were well over a million, which set a new record. To put that number in perspective, that’s more than twice the number of people who watched the first airing of the return of Twin Peaks. It’s greater than the viewership of the average episode of Girls. In an era when many of us watch even sporting events, award ceremonies, or talk shows on a short delay, HQ Trivia obliges its viewers to pay close attention at the same time for ten minutes or more at a stretch. And we’re at a point where it feels like a real accomplishment to force any live audience, which is otherwise so balkanized and diffused, to focus on this tiny node of content.

Not surprisingly, the game has inspired a certain amount of curiosity about its ultimate intentions. It runs no advertisements of any kind, with a prize pool funded entirely by venture capital. But its plans aren’t exactly a mystery. As the reporter Todd Spangler writes in Variety:

So how do HQ Trivia’s creators plan to make money, instead of just giving it away? [Co-founder Rus] Yusupov said monetization is not currently the company’s focus. That said, it’s “getting a ton of interest from brands and agencies who want to collaborate and do something fun,” he added. “If we do any brand integrations or sponsors, the focus will be on making it enhance the gameplay,” Yusupov said. “For a user, the worst thing is feeling like, ‘I’m being optimized—I’m the product now.’ We want to make a great game, and make it grow and become something really special.”

It’s worth remembering that this game launched only this past August, and that we’re at a very early stage in its development, which has shrewdly focused on increasing its audience without any premature attempts at turning a profit. Startups are often criticized for focusing on metrics like “clicks” or “eyeballs” without showing how to turn them into revenue, but for HQ, it makes a certain amount of sense—these are literal eyeballs, all demonstrably turned to the same screen at once, and it yields the closest thing that anyone has seen in years to a captive audience. When the time comes for it to approach sponsors, it’s going to present a compelling case indeed.

But the specter of a million users glued simultaneously to their phones, hanging on Scott Rogowsky’s every word, fills some onlookers with uneasiness. Rogowsky himself has joked on the air about the comparisons to Black Mirror, and several commentators have taken it even further. Ian Bogost says in The Atlantic:

Why do I feel such dread when I play? It’s not the terror of losing, or even that of being embarrassed for answering questions wrong in front of my family and friends…It’s almost as if HQ is a fictional entertainment broadcast, like the kind created to broadcast the Hunger Games in the fictional nation of Panem. There, the motion graphics, the actors portraying news or talk-show hosts, the sets, the chyrons—they impose the grammar of television in order to recreate it, but they contort it in order to emphasize that it is also fictional…HQ bears the same sincere fakery, but seems utterly unaware that it is doing so.

And Miles Surrey of The Ringer envisions a dark future, over a century from now, in which playing the app is compulsory:

Scott—or “Trill Trebek,” or simply “God”—is a messianic figure to the HQties, the collective that blindly worships him, and a dictatorial figure to the rest of us…I made it to question 17. My children will eat today…You need to delete HQ from your phones. What appears to be an exciting convergence of television and app content is in truth the start of something terrifying, irreparable, and dangerous. You are conditioned to stop what you’re doing twice a day and play a trivia game—that is just Phase 1.

Yet I suspect that the real reason that this game feels so sinister to some observers is that it marks a return to a phenomenon that we thought we’d all left behind, and which troubled us subconsciously in ways that we’re only starting to grasp. It’s appointment television. In my time zone, the game airs around eight o’clock at night, which happens to be when I put my daughter to bed. I never know exactly how long the process will take—sometimes she falls asleep at once, but she tends to stall—so I usually get downstairs to join my wife about five or ten minutes later. By that point, the game has begun, and I often hear her say glumly: “I got out already.” And that’s it. It’s over until the same time tomorrow. Even if there were a way to rewind, there’s no point, because the money has already been distributed and nothing else especially interesting happened. (The one exception was the episode that aired on the day that one of the founders threatened to fire Rogowsky in retaliation for a profile in The Daily Beast, which marked one of the few times that the show’s mask seemed to crack.) But believe it or not, this is how we all used to watch television. We couldn’t record, pause, or control what was on, which is a fact that my daughter finds utterly inexplicable whenever we stay in a hotel room. It was a collective experience, but we also conducted it in relative isolation, except from the people who were in the same room as we were. That’s true of HQ as well, which moves at such a high speed that it’s impossible to comment on it on social media without getting thrown off your rhythm. These days, many of us only watch live television together at shared moments of national trauma, and HQ is pointedly the opposite. It’s trivial, but we have no choice but to watch it at the exact same time, with no chance of saving, pausing, or sharing. The screen might be smaller, but otherwise, it’s precisely what many of us did for decades. And if it bothers us now, it’s only because we’ve realized how dystopian it was all along.

The two hawks

I spent much of yesterday thinking about Mike Pence and a few Israeli hawks, although perhaps not the sort that first comes to mind. Many of you have probably seen the excellent profile by McKay Coppins that ran this week in The Atlantic, which attempts to answer a question that is both simpler and more complicated than it might initially seem—namely how a devout Christian like Pence can justify hitching his career to the rise of a man whose life makes a mockery of all the ideals that most evangelicals claim to value. You could cynically assume that Pence, like so many others, has coldly calculated that Trump’s support on a few key issues, like abortion, outweighs literally everything else that he could say or do, and you might well be right. But Pence also seems to sincerely believe that he’s an instrument of divine will, a conviction that dates back at least to his successful campaign for the House of Representatives. Coppins writes:

By the time a congressional seat opened up ahead of the 2000 election, Pence was a minor Indiana celebrity and state Republicans were urging him to run. In the summer of 1999, as he was mulling the decision, he took his family on a trip to Colorado. One day while horseback riding in the mountains, he and Karen looked heavenward and saw two red-tailed hawks soaring over them. They took it as a sign, Karen recalled years later: Pence would run again, but this time there would be “no flapping.” He would glide to victory.

This anecdote caught my eye for reasons that I’ll explain in a moment, but this version leaves out a number of details. As far as I can determine, it first appears in an article that ran in Roll Call back in 2010. It mentions that Pence keeps a plaque on his desk that reads “No Flapping,” and it places the original incident, curiously, in Theodore Roosevelt National Park in North Dakota, not in Colorado:

“We were trying to make a decision as a family about whether to sell our house, move back home and make another run for Congress, and we saw these two red-tailed hawks coming up from the valley floor,” Pence says. He adds that the birds weren’t flapping their wings at all; instead, they were gliding through the air. As they watched the hawks, Pence’s wife told him she was onboard with a third run. “I said, ‘If we do it, we need to do it like those hawks. We just need to spread our wings and let God lift us up where he wants to take us,’” Pence remembers. “And my wife looked at me and said, ‘That’ll be how we do it, no flapping.’ So I keep that on my desk to remember every time my wings get sore, stop flapping.”

Neither article mentions it, but I’m reasonably sure that Pence was thinking of the verse in the Book of Job, which he undoubtedly knows well, that marks the only significant appearance of a hawk in the Bible: “Does the hawk fly by your wisdom, and stretch her wings toward the south?” As one commentary notes, with my italics added: “Aside from calling attention to the miraculous flight, this might refer to migration, or to the wonderful soaring exhibitions of these birds.”

Faithful readers of this blog might recall that earlier this year, I spent three days tracing the movements of a few hawks in the life of another singular figure—the Israeli psychic Uri Geller. In the book Uri, which presents its subject as a messianic figure who draws his telekinetic and precognitive abilities from extraterrestrials, the parapsychological researcher Andrija Puharich recounts a trip to Tel Aviv, where he quickly became convinced of Geller’s powers. While driving through the countryside on New Year’s Day of 1972, Puharich saw two white hawks, followed by others at his hotel two days later:

At times one of the birds would glide in from the sea right up to within a few meters of the balcony; it would flutter there in one spot and stare at me directly in the eyes. It was a unique experience to look into the piercing, “intelligent” eyes of a hawk. It was then that I knew I was not looking into the eyes of an earthly hawk. This was confirmed about 2pm when Uri’s eyes followed a feather, loosened from the hawk, that floated on an updraft toward the top of the Sharon Tower. As his eye followed the feather to the sky, he was startled to see a dark spacecraft parked directly over the hotel.

Geller said that the birds, which he incorrectly claimed weren’t native to Israel, had been sent to protect them. “I dubbed this hawk ‘Horus’ and still use this name each time he appears to me,” Puharich concludes, adding that he saw it on two other occasions. And according to Robert Anton Wilson’s book Cosmic Trigger, the following year, the writer Saul-Paul Sirag was speaking to Geller during an LSD trip when he saw the other man’s head turn into that of a “bird of prey.”

In my original posts, I pointed out that these stories were particularly striking in light of contemporaneous events in the Middle East—much of the action revolves around Geller allegedly receiving information from a higher power about a pending invasion of Israel by Egypt, which took place two years later, and Horus was the Egyptian god of war. (Incidentally, Geller, who is still around, predicted last year that Donald Trump would win the presidential election, based primarily on the fact that Trump’s name contains eleven letters. Geller has a lot to say about the number eleven, which, if you squint just right, looks a bit like two hawks perched side by side, their heads in profile.) And it’s hard to read about Pence’s hawks now without thinking about recent developments in that part of the world. Trump’s policy toward Israel is openly founded on his promises to American evangelicals, many of whom are convinced that the Jews have a role to play in the end times. Pence himself tiptoes right up to the edge of saying this in an interview quoted by Coppins: “My support for Israel stems largely from my personal faith. In the Bible, God promises Abraham, ‘Those who bless you I will bless, and those who curse you I will curse.’” Which might be the most revealing statement of all. The verse that I mentioned earlier is uttered by God himself, who speaks out of the whirlwind with an accounting of his might, which is framed as a sufficient response to Job’s lamentations. You could read it, if you like, as an argument that power justifies suffering, which might be convincing when presented by the divine presence, but less so by men willing to distort their own beliefs beyond all recognition for the sake of their personal advantage. And here’s how the passage reads in full:

Does the hawk fly by your wisdom, and spread its wings toward the south? Does the eagle mount up at your command, and make its nest on high? On the rock it dwells and resides, on the crag of the rock and the stronghold. From there it spies out the prey; its eyes observe from afar. Its young ones suck up blood; and where the slain are, there it is.

The secret villain

Note: This post alludes to a plot point from Pixar’s Coco.

A few years ago, after Frozen was first released, The Atlantic ran an essay by Gina Dalfonzo complaining about the moment—fair warning for a spoiler—when Prince Hans was revealed to be the film’s true villain. Dalfonzo wrote:

That moment would have wrecked me if I’d seen it as a child, and the makers of Frozen couldn’t have picked a more surefire way to unsettle its young audience members…There is something uniquely horrifying about finding out that a person—even a fictional person—who’s won you over is, in fact, rotten to the core. And it’s that much more traumatizing when you’re six or seven years old. Children will, in their lifetimes, necessarily learn that not everyone who looks or seems trustworthy is trustworthy—but Frozen’s big twist is a needlessly upsetting way to teach that lesson.

Whatever you might think of her argument, it’s obvious that Disney didn’t buy it. In fact, the twist in question—in which a seemingly innocuous supporting character is exposed in the third act as the real bad guy—has appeared so monotonously in the studio’s recent movies that I was already complaining about it a year and a half ago. By my count, the films that fall back on this convention include not just Frozen, but Wreck-It Ralph, Zootopia, and now the excellent Coco, which implies that the formula is spilling over from its parent studio to Pixar. (To be fair, it goes at least as far back as Toy Story 2, but it didn’t become the equivalent of the house style until about six or seven years ago.)

This might seem like a small point of storytelling, but it interests me, both because we’ve been seeing it so often and because it’s very different from the stock Disney approach of the past, in which the lines between good and evil were clearly demarcated from the opening frame. In some ways, it’s a positive development—among other things, it means that characters are no longer defined primarily by their appearance—and it may just be a natural instance of a studio returning repeatedly to a trick that has worked in the past. But I can’t resist a more sinister reading. All of the examples that I’ve cited come from the period since John Lasseter took over as the chief creative officer of Disney Animation Studios, and as we’ve recently learned, he wasn’t entirely what he seemed, either. A Variety article recounts:

For more than twenty years, young women at Pixar Animation Studios have been warned about the behavior of John Lasseter, who just disclosed that he is taking a leave due to inappropriate conduct with women. The company’s cofounder is known as a hugger. Around Pixar’s Emeryville, California, offices, a hug from Lasseter is seen as a mark of approval. But among female employees, there has long been widespread discomfort about Lasseter’s hugs and about the other ways he showers attention on young women…“Just be warned, he likes to hug the pretty girls,” [a former employee] said she was told. “He might try to kiss you on the mouth.” The employee said she was alarmed by how routine the whole thing seemed. “There was kind of a big cult around John,” she says.

And a piece in The Hollywood Reporter adds: “Sources say some women at Pixar knew to turn their heads quickly when encountering him to avoid his kisses. Some used a move they called ‘the Lasseter’ to prevent their boss from putting his hands on their legs.”

Of all the horror stories that have emerged lately about sexual harassment by men in power, this is one of the hardest for me to read, and it raises troubling questions about the culture of a company that I’ve admired for a long time. (Among other things, it sheds a new light on the Pixar motto, as expressed by Andrew Stanton, that I’ve quoted here before: “We’re in this weird, hermetically sealed freakazoid place where everybody’s trying their best to do their best—and the films still suck for three out of the four years it takes to make them.” But it also goes without saying that it’s far easier to fail repeatedly on your way to success if you’re a white male who fits a certain profile. And these larger cultural issues evidently contributed to the departure from the studio of Rashida Jones and her writing partner.) It also makes me wonder a little about the movies themselves. After the news broke about Lasseter, there were comments online about his resemblance to Lotso in Toy Story 3, who announces jovially: “First thing you gotta know about me—I’m a hugger!” But the more I think about it, the more this seems like a bona fide inside joke about a situation that must have been widely acknowledged. As a recent article in Deadline reveals:

[Lasseter] attended some wrap parties with a handler to ensure he would not engage in inappropriate conduct with women, say two people with direct knowledge of the situation…Two sources recounted Lasseter’s obsession with the young character actresses portraying Disney’s Fairies, a product line built around the character of Tinker Bell. At the animator’s insistence, Disney flew the women to a New York event. One Pixar employee became the designated escort as Lasseter took the young women out drinking one night, and to a party the following evening. “He was inappropriate with the fairies,” said the former Pixar executive, referring to physical contact that included long hugs. “We had to have someone make sure he wasn’t alone with them.”

Whether or not the reference in Toy Story 3 was deliberate—the script is credited to Michael Arndt, based on a story by Lasseter, Stanton, and Lee Unkrich, and presumably with contributions from many other hands—it must have inspired a few uneasy smiles of recognition at Pixar. And its emphasis on seemingly benign figures who reveal an unexpected dark side, including Lotso himself, can easily be read as an expression, conscious or otherwise, of the tensions between Lasseter’s public image and his long history of misbehavior. (I’ve been thinking along similar lines about Kevin Spacey, whose “sheer meretriciousness” I identified a long time ago as one of his most appealing qualities as an actor, and of whom I once wrote here: “Spacey always seems to be impersonating someone else, and he does the best impersonation of a great actor that I’ve ever seen.” And it seems now that this calculated form of pretending amounted to a way of life.) Lasseter’s influence over Pixar and Disney is so profound that it doesn’t seem farfetched to see its films both as an expression of his internal divisions and of the reactions of those around him, and you don’t need to look far for parallel examples. My daughter, as it happens, knows exactly who Lasseter is—he’s the big guy in the Hawaiian shirt who appears at the beginning of all of her Hayao Miyazaki movies, talking about how much he loves the film that we’re about to see. I don’t doubt that he does. But not only do Miyazaki’s greatest films lack villains entirely, but the twist generally runs in the opposite direction, in which a character who initially seems forbidding or frightening is revealed to be kinder than you think. Simply on the level of storytelling, I know which version I prefer. Under Lasseter, Disney and Pixar have produced some of the best films of recent decades, but they also have their limits. And it only stands to reason that these limitations might have something to do with the man who was more responsible than anyone else for bringing these movies to life.

The notebook and the brain

A little over two decades ago, the philosophers Andy Clark and David Chalmers published a paper titled “The Extended Mind.” Its argument, which no one who encounters it is likely to forget, is that the human mind isn’t confined to the bounds of the skull, but includes many of the tools and external objects that we use to think, from grocery lists to Scrabble tiles. The authors present an extended thought experiment about a man named Otto who suffers from Alzheimer’s disease, which obliges him to rely on his notebook to remember how to get to a museum. They argue that this notebook is effectively occupying the role of Otto’s memory, but only because it meets a particular set of criteria:

First, the notebook is a constant in Otto’s life—in cases where the information in the notebook would be relevant, he will rarely take action without consulting it. Second, the information in the notebook is directly available without difficulty. Third, upon retrieving information from the notebook he automatically endorses it. Fourth, the information in the notebook has been consciously endorsed at some point in the past, and indeed is there as a consequence of this endorsement.

The authors conclude: “The information in Otto’s notebook, for example, is a central part of his identity as a cognitive agent. What this comes to is that Otto himself is best regarded as an extended system, a coupling of biological organism and external resources…Once the hegemony of skin and skull is usurped, we may be able to see ourselves more truly as creatures of the world.”

When we think and act, we become agents that are “spread into the world,” as Clark and Chalmers put it, and this extension is especially striking during the act of writing. In an article that appeared just last week in The Atlantic, “You Think With the World, Not Just Your Brain,” Sam Kriss neatly sums up the problem: “Language sits hazy in the world, a symbolic and intersubjective ether, but at the same time it forms the substance of our thought and the structure of our understanding. Isn’t language thinking for us?” He continues:

This is not, entirely, a new idea. Plato, in his Phaedrus, is hesitant or even afraid of writing, precisely because it’s a kind of artificial memory, a hypomnesis…Writing, for Plato, is a pharmakon, a “remedy” for forgetfulness, but if taken in too strong a dose it becomes a poison: A person no longer remembers things for themselves; it’s the text that remembers, with an unholy autonomy. The same criticisms are now commonly made of smartphones. Not much changes.

The difference, of course, is that our own writing implies the involvement of the self in the past, which is a dialogue that doesn’t exist when we’re simply checking information online. Clark and Chalmers, who wrote at a relatively early stage in the history of the Internet, are careful to make this distinction: “The Internet is likely to fail [the criteria] on multiple counts, unless I am unusually computer-reliant, facile with the technology, and trusting, but information in certain files on my computer may qualify.” So can the online content that we make ourselves—I’ve occasionally found myself checking this blog to remind myself what I think about something, and I’ve outsourced much of my memory to Google Photos.

I’ve often written here about the dialogue between our past, present, and future selves implicit in the act of writing, whether we’re composing a novel or jotting down a Post-It note. Kriss quotes Jacques Derrida on the humble grocery list: “At the very moment ‘I’ make a shopping list, I know that it will only be a list if it implies my absence, if it already detaches itself from me in order to function beyond my ‘present’ act and if it is utilizable at another time.” And I’m constantly aware of the book that I’m writing as a form of time travel. As I mentioned last week, I’m preparing the notes, which means that I often have to make sense of something that I wrote down over two years ago. There are times when the presence of that other self is so strong that it feels as if he’s seated next to me, even as I remain conscious of the gap between us. (For one thing, my past self didn’t know nearly as much about John W. Campbell.) And the two of us together are wiser, more effective, and more knowledgeable than either one of us alone, as long as we have writing to serve as a bridge between us. If a notebook is a place for organizing information that we can’t easily store in our heads, that’s even more true of a book written for publication, which serves as a repository of ideas to be manipulated, rearranged, and refined over time. This can lead to the odd impression that your book somehow knows more than you do, which it probably does. Knowledge is less about raw data than about the connections between them, and a book is the best way we have for compiling our moments of insight in a form that can be processed more or less all at once. We measure ourselves against the intelligence of authors in books, but we’re also comparing two fundamentally different things. Whatever ideas I have right now on any given subject probably aren’t as good as a compilation of everything that occurred to my comparably intelligent double over the course of two or three years.

This implies that most authors are useful not so much for their deeper insights as for their greater availability, which allows them to externalize their thoughts and manipulate them in the real world for longer and with more intensity than their readers can. (Campbell liked to remind his writers that the magazine’s subscribers were paying them to think on their behalf.) I often remember one of my favorite anecdotes about Isaac Asimov, which he shares in the collection Opus 100. He was asked to speak on the radio on nothing less than the human brain, on which he had just published a book. Asimov responded: “Heavens! I’m not a brain expert.” When the interviewer pointed out that he had just written an entire book on the subject, Asimov explained:

“Yes, but I studied up for the book and put in everything I could learn. I don’t know anything but the exact words in the book, and I don’t think I can remember all those in a pinch. After all,” I went on, a little aggrieved, “I’ve written books on dozens of subjects. You can’t expect me to be expert on all of them just because I’ve written books about them.”

Every author can relate to this, and there are times when “I don’t know anything but the exact words in the book” sums up my feelings about my own work. Asimov’s case is particularly fascinating because of the scale involved. By some measures, he was the most prolific author in American history, with over four hundred books to his credit, and even if we strip away the anthologies and other works that he used to pad the count, it’s still a huge amount of information. To what extent was Asimov coterminous with his books? The answer, I think, lies somewhere between “Entirely” and “Not at all,” and there was presumably more of Asimov in his memoirs than in An Easy Introduction to the Slide Rule. But he’s only an extreme version of a phenomenon that applies to every last one of us. When the radio interviewer asked incredulously if he was an expert on anything, Asimov responded: “I’m an expert on one thing. On sounding like an expert.” And that’s true of everyone. The notes that we take allow us to pose as experts in the area that matters the most—on the world around us, and even our own lives.

The men who sold the moonshot

When you ask Google whether we should build houses on the ocean, it gives you a bunch of results like these. If you ask Google X, the subsidiary within the company responsible for investigating “moonshot” projects like self-driving cars and space elevators, the answer that you get is rather different, as Derek Thompson reports in the cover story for this month’s issue of The Atlantic:

Like a think-tank panel with the instincts of an improv troupe, the group sprang into an interrogative frenzy. “What are the specific economic benefits of increasing housing supply?” the liquid-crystals guy asked. “Isn’t the real problem that transportation infrastructure is so expensive?” the balloon scientist said. “How sure are we that living in densely built cities makes us happier?” the extradimensional physicist wondered. Over the course of an hour, the conversation turned to the ergonomics of Tokyo’s high-speed trains and then to Americans’ cultural preference for suburbs. Members of the team discussed commonsense solutions to urban density, such as more money for transit, and eccentric ideas, such as acoustic technology to make apartments soundproof and self-driving housing units that could park on top of one another in a city center. At one point, teleportation enjoyed a brief hearing.

Thompson writes a little later: “I’d expected the team at X to sketch some floating houses on a whiteboard, or discuss ways to connect an ocean suburb to a city center, or just inform me that the idea was terrible. I was wrong. The table never once mentioned the words floating or ocean. My pitch merely inspired an inquiry into the purpose of housing and the shortfalls of U.S. infrastructure. It was my first lesson in radical creativity. Moonshots don’t begin with brainstorming clever answers. They start with the hard work of finding the right questions.”

I don’t know why Thompson decided to ask about “oceanic residences,” but I read this section of the article with particular interest, because about two years ago, I spent a month thinking about the subject intensively for my novella “The Proving Ground.” As I’ve described elsewhere, I knew early on in the process that it was going to be a story about the construction of a seastead in the Marshall Islands, which was pretty specific. There was plenty of background material available, ranging from general treatments of the idea in books like The Millennial Project by Marshall T. Savage—which had been sitting unread on my shelf for years—to detailed proposals for seasteads in the real world. The obvious source was The Seasteading Institute, a libertarian pipe dream funded by Peter Thiel that generated a lot of useful plans along the way, as long as you saw it as the legwork for a science fiction story, rather than as a project on which you were planning to actually spend fifty billion dollars. The difference between most of these proposals and the brainstorming session that Thompson describes is that they start with a floating city and then look for reasons to justify it. Seasteading is a solution in search of a problem. In other words, it’s science fiction, which often starts with a premise or setting that seems like it would lead to an exciting story and then searches for the necessary rationalizations. (The more invisible the process, the better.) And this can lead us to troubling places. As I’ve noted before, Thiel blames many of this country’s problems on “a failure of imagination,” and his nostalgia for vintage science fiction is rooted in a longing for the grand gestures that it embodied: the flying car, the seastead, the space colony. 
As he famously said six years ago to The New Yorker: “The anthology of the top twenty-five sci-fi stories in 1970 was, like, ‘Me and my friend the robot went for a walk on the moon,’ and in 2008 it was, like, ‘The galaxy is run by a fundamentalist Islamic confederacy, and there are people who are hunting planets and killing them for fun.’”

Google X isn’t immune to this tendency—Google Glass was, if anything, a solution in search of a problem—and some degree of science-fictional thinking is probably inherent to any such enterprise. In his article, Thompson doesn’t mention science fiction by name, but the whole division is clearly reminiscent of and inspired by the genre, down to the term “moonshot” and that mysterious letter at the end of its name. (Company lore claims that the “X” was chosen as “a purposeful placeholder,” but it’s hard not to think that it was motivated by the same impulse that gave us Dimension X, X Minus 1, Rocketship X-M, and even The X-Files.) In fact, an earlier article for The Atlantic looked at this connection in depth, and its conclusions weren’t altogether positive. Three years ago, in the same publication, Robinson Meyer quoted a passage from an article in Fast Company about the kinds of projects favored by Google X, but he drew a more ambivalent conclusion:

A lot of people might read that [description] and think: Wow, cool, Google is trying to make the future! But “science fiction” provides but a tiny porthole onto the vast strangeness of the future. When we imagine a “science fiction”-like future, I think we tend to picture completed worlds, flying cars, the shiny, floating towers of midcentury dreams. We tend, in other words, to imagine future technological systems as readymade, holistic products that people will choose to adopt, rather than as the assembled work of countless different actors, which they’ve always really been. The futurist Scott Smith calls these “flat-pack futures,” and they infect “science fictional” thinking.

He added: “I fear—especially when we talk about ‘science fiction’—that we miss the layeredness of the world, that many people worked to build it…Flying through space is awesome, but if technological advocates want not only to make their advances but to hold onto them, we have better learn the virtues of incrementalism.” (The contrast between Meyer’s skepticism and Thompson’s more positive take feels like a matter of access—it’s easier to criticize Google X’s assumptions when it’s being profiled by a rival magazine.)

But Meyer makes a good point, and science fiction’s mixed record at dealing with incrementalism is a natural consequence of its origins in popular fiction. A story demands a protagonist, which encourages writers to see scientific progress in terms of heroic figures. The early fiction of John W. Campbell returns monotonously to the same basic plot, in which a lone genius discovers atomic power and uses it to build a spaceship, drawing on the limitless resources of a wealthy and generous benefactor. As Isaac Asimov noted in his essay “Big, Big, Big”:

The thing about John Campbell is that he liked things big. He liked big men with big ideas working out big applications of their big theories. And he liked it fast. His big men built big weapons within days; weapons that were, moreover, without serious shortcomings, or at least, with no shortcomings that could not be corrected as follows: “Hmm, something’s wrong—oh, I see—of course.” Then, in two hours, something would be jerry-built to fix the jerry-built device.

This works well enough in pulp adventure, but after science fiction began to take itself seriously as prophecy, it fossilized into the notion that all problems can be approached as provinces of engineering and solved by geniuses working alone or in small groups. Elon Musk has been compared to Tony Stark, but he’s really the modern incarnation of a figure as old as The Skylark of Space, and the adulation that he still inspires shades into beliefs that are even less innocuous—like the idea that our politics should be entrusted to similarly big men. Writing of Google X’s Rapid Evaluation team, Thompson uses terms that would have made Campbell salivate: “You might say it’s Rapid Eval’s job to apply a kind of future-perfect analysis to every potential project: If this idea succeeds, what will have been the challenges? If it fails, what will have been the reasons?” Science fiction likes to believe that it’s better than average at this kind of forecasting. But it’s just as likely that it’s worse.

The monotonous periodicity of genius

Yesterday, I read a passage from the book Music and Life by the critic and poet W.J. Turner that has been on my mind ever since. He begins with a sentence from the historian Charles Sanford Terry, who says of Bach’s cantatas: “There are few phenomena in the record of art more extraordinary than this unflagging cataract of inspiration in which masterpiece followed masterpiece with the monotonous periodicity of a Sunday sermon.” Turner objects to this:

In my enthusiasm for Bach I swallowed this statement when I first met it, but if Dr. Terry will excuse the expression, it is arrant nonsense. Creative genius does not work in this way. Masterpieces are not produced with the monotonous periodicity of a Sunday sermon. In fact, if we stop to think we shall understand that this “monotonous periodicity” was exactly what was wrong with a great deal of Bach’s music. Bach, through a combination of natural ability and quite unparalleled concentration on his art, had arrived at the point of being able to sit down at any minute of any day and compose what had all the superficial appearance of being a masterpiece. It is possible that even Bach himself did not know which was a masterpiece and which was not, and it is abundantly clear to me that in all his large-sized works there are huge chunks of stuff to which inspiration is the last word that one could apply.

All too often, Turner implies, Bach leaned on his technical facility when inspiration failed or he simply felt indifferent to the material: “The music shows no sign of Bach’s imagination having been fired at all; the old Leipzig Cantor simply took up his pen and reeled off this chorus as any master craftsman might polish off a ticklish job in the course of a day’s work.”

I first encountered the Turner quotation in The New Listener’s Companion and Record Guide by B.H. Haggin, who cites his fellow critic approvingly and adds: “This seems to me an excellent description of the essential fact about Bach—that one hears always the operation of prodigious powers of invention and construction, but frequently an operation that is not as expressive as it is accomplished.” Haggin continues:

Listening to the six sonatas or partitas for unaccompanied violin, the six sonatas or suites for unaccompanied cello, one is aware of Bach’s success with the difficult problem he set himself, of contriving for the instrument a melody that would imply its underlying harmonic progressions between the occasional chords. But one is aware also that solving this problem was not equivalent to writing great or even enjoyable music…I hear only Bach’s craftsmanship going through the motions of creation and producing the external appearances of expressiveness. And I suspect that it is the name of Bach that awes listeners into accepting the appearance as reality, into hearing an expressive content which isn’t there, and into believing that if the content is difficult to hear, this is only because it is especially profound—because it is “the passionate, yet untroubled meditation of a great mind” that lies beyond “the composition’s formidable technical frontiers.”

Haggin confesses that he regards many pieces in The Goldberg Variations or The Well-Tempered Clavier as “examples of competent construction that are, for me, not interesting pieces of music.” And he sums up: “Bach’s way of exercising the spirit was to exercise his craftsmanship; and some of the results offer more to delight an interest in the skillful use of technique than to delight the spirit.”