Posts Tagged ‘Slate’

A Geodesic Life

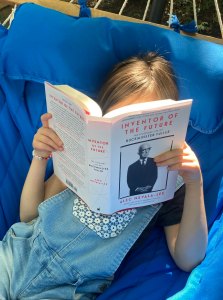

After three years of work—and more than a few twists and turns—my latest book, Inventor of the Future: The Visionary Life of Buckminster Fuller, is finally here. I think it’s the best thing that I’ve ever done, or at least the one book that I’m proudest to have written. After last week’s writeup in The Economist, a nice review ran this morning in the New York Times, which is a dream come true, and you can check out excerpts today at Fast Company and Slate. (At least one more should be running this weekend in The Daily Beast.) If you want to hear more about it from me, I’m doing a virtual event today sponsored by the Buckminster Fuller Institute, and on Saturday, August 13, I’ll be holding a discussion at the Oak Park Public Library with Sarah Holian of the Frank Lloyd Wright Trust, which will also be available to view online. There’s a lot more to say here, and I expect to keep talking about Fuller for the rest of my life, but for now, I’m just delighted and relieved to see it out in the world at last.

Running it up the flagpole

Two months ago, I was browsing Reddit when I stumbled across a post that said: “TIL that the current American Flag design originated as a school project from Robert G Heft, who received a B- for lack of originality, yet was promised an A if he successfully got it selected as the national flag. The design was later chosen by President Eisenhower for the national flag of the US.” After I read the submission, which received more than 21,000 upvotes, I was skeptical enough of the story to dig deeper. The result is my article “False Flag,” which appeared today in Slate. I won’t spoil it here, but rest assured that it’s a wild ride, complete with excursions into newspaper archives, government documents, and the world of vexillology, or flag studies. It’s probably my most surprising discovery in a lifetime of looking into unlikely subjects, and I have a hunch that there might be even more to come. I hope you’ll check it out.

The Rover Boys in the Air

On September 3, 1981, a man who had recently turned seventy reminisced in a letter to a librarian about his favorite childhood books, which he had read in his youth in Dixon, Illinois:

I, of course, read all the books that a boy that age would like—The Rover Boys; Frank Merriwell at Yale; Horatio Alger. I discovered Edgar Rice Burroughs and read all the Tarzan books. I am amazed at how few people I meet today know that Burroughs also provided an introduction to science fiction with John Carter of Mars and the other books that he wrote about John Carter and his frequent trips to the strange kingdoms to be found on the planet Mars.

At almost exactly the same time, a boy in Kansas City was working his way through a similar shelf of titles, as described by one of his biographers: “Like all his friends, he read the Rover Boys series and all the Horatio Alger books…[and] Edgar Rice Burroughs’s wonderful and exotic Mars books.” And a slightly younger member of the same generation would read many of the same novels while growing up in Brooklyn, as he recalled in his memoirs: “Most important of all, at least to me, were The Rover Boys. There were three of them—Dick, Tom, and Sam—with Tom, the middle one, always described as ‘fun-loving.’”

The first youngster in question was Ronald Reagan; the second was Robert A. Heinlein; and the third was Isaac Asimov. There’s no question that all three men grew up reading many of the same adventure stories as their contemporaries, and Reagan’s apparent fondness for science fiction has inspired a fair amount of speculation. In a recent article on Slate, Kevin Bankston retells the famous story of how WarGames inspired the president to ask his advisors about the likelihood of such an incident occurring for real, concluding that it was “just one example of how science fiction influenced his administration and his life.” The Day the Earth Stood Still, which was adapted from a story by Harry Bates that originally appeared in Astounding, allegedly influenced Reagan’s interest in the potential effect of extraterrestrial contact on global politics, which he once brought up with Gorbachev. And in the novelistic biography Dutch, Edmund Morris—or his narrative surrogate—ruminates at length on the possible origins of the Strategic Defense Initiative:

Long before that, indeed, [Reagan] could remember the warring empyrean of his favorite boyhood novel, Edgar Rice Burroughs’s Princess of Mars. I keep a copy on my desk: just to flick through it is to encounter five-foot-thick polished glass domes over cities, heaven-filling salvos, impregnable walls of carborundum, forts, and “manufactories” that only one man with a key can enter. The book’s last chapter is particularly imaginative, dominated by the magnificent symbol of a civilization dying for lack of air.

For obvious marketing reasons, I’d love to be able to draw a direct line between science fiction and the Reagan administration. Yet it’s also tempting to read a greater significance into these sorts of connections than they actually deserve. The story of science fiction’s role in the Strategic Defense Initiative has been told countless times, but usually by the writers themselves, and it isn’t clear what impact it truly had. (The definitive book on the subject, Way Out There in the Blue by Frances FitzGerald, doesn’t mention any authors at all by name, and it refers only once, in passing, to a group of advisors that included “a science fiction writer.” And I suspect that the most accurate description of their involvement appears in a speech delivered by Greg Bear: “Science fiction writers helped the rocket scientists elucidate their vision and clarified it.”) Reagan’s interest in science fiction seems less like a fundamental part of his personality than like a single aspect of a vision that was shaped profoundly by the popular culture of his young adulthood. The fact that Reagan, Heinlein, and Asimov devoured many of the same books only tells me that this was what a lot of kids were reading in the twenties and thirties—although perhaps only the exceptionally imaginative would try to live their lives as an extension of those stories. If these influences were genuinely meaningful, we should also be talking about the Rover Boys, a series “for young Americans” about three brothers at boarding school that has now been almost entirely forgotten. And if we’re more inclined to emphasize the science fiction side for Reagan, it’s because this is the only genre that dares to make such grandiose claims for itself.

In fact, the real story here isn’t about science fiction, but about Reagan’s gift for appropriating the language of mainstream culture in general. He was equally happy to quote Dirty Harry or Back to the Future, and he may not even have bothered to distinguish between his sources. In Way Out There in the Blue, FitzGerald brilliantly unpacks a set of unscripted remarks that Reagan made to reporters on March 24, 1983, in which he spoke of the need to render nuclear weapons “obsolete”:

There is a part of a line from the movie Torn Curtain about making missiles “obsolete.” What many inferred from the phrase was that Reagan believed what he had once seen in a science fiction movie. But to look at the explanation as a whole is to see that he was following a train of thought—or simply a trail of applause lines—from one reassuring speech to another and then appropriating a dramatic phrase, whose origin he may or may not have remembered, for his peroration.

Take out the word “reassuring,” and we have a frightening approximation of our current president, whose inner life is shaped in real time by what he sees on television. But we might feel differently if those roving imaginations had been channeled by chance along different lines—like a serious engagement with climate change. It might just as well have gone that way, but it didn’t, and we’re still dealing with the consequences. As Greg Bear asks: “Do you want your presidents to be smart? Do you want them to be dreamers? Or do you want them to be lucky?”

Science for the people

“My immediate reaction was one of intense loss,” Fern MacDougal, a graduate student in ecology, says in the short documentary “Science for the People.” She’s referring to the experience of speaking with the founders of the organization of the same name, which was formed in the late sixties to mobilize scientists and engineers for political change, and which recently returned after a long hiatus. As Rebecca Onion elaborates in an article on Slate:

In some areas—climate, reproductive justice—our situation has become even more perilous now than it was then. Biological determinism has a stubborn way of cropping up again and again in public discourse…Then there’s the sad reality that the very basic twentieth-century concept that science is helpful in public life because it helps us make evidence-based decisions is increasingly threatened under Trump. “It feels as though we’re fighting like heck to defend what would have been ridiculous to think we had to defend, back in those old days,” [biologist Katherine] Yih said. “It was just so obvious that science has that capability to improve the quality of life for people, even if it was often being used for militaristic purposes and so forth. But the notion that we had to defend science against our government was just—it wouldn’t have been imaginable, I think.”

Science for the People has since been revived as a nonprofit organization and an online magazine, and its presence now is necessary and important. But it also feels sad to reflect on the fact that we need it again, nearly half a century after it was originally founded.

In the oral history The World Only Spins Forward, Tony Kushner is asked what he would tell himself at the age of twenty-nine, when he was just commencing work on the play that became Angels in America. His response is both revealing and sobering:

What a tough time to ask that question…I don’t think that I would talk to him. I think it’s better that we don’t know the future. The person that I was at twenty-nine very deeply believed that there would be great progress. I believed back then, with great certainty—I mean I wrote it in the play, “the world only spins forward,” “the time has come,” et cetera, et cetera…Working on all this stuff right after Reagan had been reelected, it felt very dark. I’m glad that I didn’t know back then that at sixty I’d be looking at some of the same fucking fights that I was looking at at twenty-nine.

And one of the great, essential, difficult things about this book is the glimpse it provides of a time that briefly felt like a turning point. One of its most memorable passages is simply a quote from a review by Steven Mikulan of L.A. Weekly: “Tony Kushner’s epic play about the death of the twentieth century has arrived at the very pivot of American history, when the Republican ice age it depicts has begun to melt away.” This was at the end of 1992, when it was still possible to look ahead with relief to the end of the administration of George H.W. Bush.

It’s no longer possible to believe, in other words, that the world only spins forward. The fact that recent events have coincided with the fiftieth anniversary of so many milestones from 1968 may just be a quirk of the calendar, but it also underlines the feeling that history is repeating itself—or rhyming—in the worst possible way. It forces us to contemplate the possibility that any trend in favor of liberal values over the last half century may have been an illusory blip, and that we’re experiencing a correction back to the way human society has nearly always been. (And it isn’t an accident that Francis Fukuyama, who famously proclaimed the end of history and the triumph of western culture in the early nineties, has a new book out that purports to explain what happened instead. Its title is Identity.) But I can think of two possible forms of consolation. The first is that this is a necessary reawakening to the nature of history itself, which we need to acknowledge in order to deal with it. One of the major influences on Angels in America was the work of the philosopher Walter Benjamin, of whom Kushner’s friend and dramaturge Kimberly Flynn observes:

According to Benjamin, “One reason fascism has a chance is that in the name of progress its opponents treat it as a historical norm,” a developmental phase on the way to something better. Opposing this notion, Benjamin wrote, “The tradition of the oppressed teaches us that ‘the state of emergency’ in which we live is not the exception but the rule.” This is the insight that should inform the conception of history. This, not incidentally, is the way in which ACT UP, operating in the tradition of the oppressed, understood the emergency of AIDS.

Which brings us to the second source of consolation, which is that we’ve been through many of the same convulsions before, and we can learn from the experiences of our predecessors—which in themselves amount to a sort of science for the people. (Alfred Korzybski called it time binding, or the unique ability of the human species to build on the discoveries of earlier generations.) We can apply their lessons to matters of survival, of activism, of staying sane. A few years ago, I would have found it hard to remember that many of the men and women I admire most lived through times in which there was no guarantee that everything would work out, and in which the threat of destruction or reversion hung over every incremental step forward. Viewers who saw 2001: A Space Odyssey on its original release emerged from the theater into a world that seemed as if it might blow apart at any moment. For many of them, it did, just as it did for those who were lost in the emergency that produced Angels in America, whom one character imagines as “souls of the dead, people who had perished,” floating up to heal the hole in the ozone layer. It’s an unforgettable image, and I like to take it as a metaphor for the way in which a culture forged in a crisis can endure for those who come afterward. Many of us are finally learning, in a limited way, how it feels to live with the uncertainty that others have felt for as long as they can remember, and we can all learn from the example of Prior Walter, who says to himself at the end of Millennium Approaches, as he hears the thunder of approaching wings: “My brain is fine, I can handle pressure, I am a gay man and I am used to pressure.” It’s true that the world only spins forward. But it can also bring us back around to where we were before.

Subterranean fact check blues

In Jon Ronson’s uneven but worthwhile book So You’ve Been Publicly Shamed, there’s a fascinating interview with Jonah Lehrer, the superstar science writer who was famously hung out to dry for a variety of scholarly misdeeds. His troubles began when a journalist named Michael C. Moynihan noticed that six quotes attributed to Bob Dylan in Lehrer’s Imagine appeared to have been fabricated. Looking back on this unhappy period, Lehrer blames “a toxic mixture of insecurity and ambition” that led him to take shortcuts—a possibility that occurred to many of us at the time—and concludes:

And then one day you get an email saying that there’s these…Dylan quotes, and they can’t be explained, and they can’t be found anywhere else, and you were too lazy, too stupid, to ever check. I can only wish, and I wish this profoundly, I’d had the temerity, the courage, to do a fact check on my last book. But as anyone who does a fact check knows, they’re not particularly fun things to go through. Your story gets a little flatter. You’re forced to grapple with all your mistakes, conscious and unconscious.

There are at least two striking points about this moment of introspection. One is that the decision whether or not to fact-check a book was left to the author himself, which feels like it’s the wrong way around, although it’s distressingly common. (“Temerity” also seems like exactly the wrong word here, but that’s another story.) The other is that Lehrer avoided asking someone to check his facts because he saw it as a painful, protracted process that obliged him to confront all the places where he had gone wrong.

He’s probably right. A fact check is useful in direct proportion to how much it hurts, and having just endured one recently for my article on L. Ron Hubbard—a subject on whom no amount of factual caution is excessive—I can testify that, as Lehrer says, it isn’t “particularly fun.” You’re asked to provide a source for countless tiny statements, and if you can’t find one in your notes, you just have to let the statement go, even if it kills you. (As far as I can recall, I had to omit exactly one sentence from the Hubbard piece, on a very minor point, and it still rankles me.) But there’s no doubt in my mind that it made the article better. Not only did it catch small errors that otherwise might have slipped into print, but it forced me to go back over every sentence from another angle, critically evaluating my argument and asking whether I was ready to stand by it. It wasn’t fun, but neither are most stages of writing, if you’re doing it right. In a couple of months, I’ll undergo much the same process with my book, as I prepare the endnotes and a bibliography, which is the equivalent of my present self performing a fact check on my past. This sort of scholarly apparatus might seem like a courtesy to the reader, and it is, but it’s also good for the book itself. Even Lehrer seems to recognize this, stating in his attempt at an apology in a keynote speech for the Knight Foundation:

If I’m lucky enough to write again, I won’t write a thing that isn’t fact-checked and fully footnoted. Because here is what I’ve learned: unless I’m willing to continually grapple with my failings—until I’m forced to fix my first draft, and deal with criticism of the second, and submit the final for a good, independent scrubbing—I won’t create anything worth keeping around.

For a writer whose entire brand is built around counterintuitive, surprising insights, this realization might seem bluntly obvious, but it only speaks to how resistant most writers, including me, are to any kind of criticism. We might take it better if we approached it with the notion that it isn’t simply for the sake of our readers, or our hypothetical critics, or even the integrity of the subject matter, but for ourselves. A footnote lurking in the back of the book makes for a better sentence on the page, if only because of the additional pass that it requires. It would help if we saw such standards—the avoidance of plagiarism, the proper citation of sources—not as guidelines imposed by authority from above, but as a set of best practices that well up from inside the work itself. A few days ago, there was yet another plagiarism controversy, which, in what Darin Morgan once called “one of those coincidences found only in real life and great fiction,” also involved Bob Dylan. As Andrea Pitzer of Slate recounts it:

During his official [Nobel] lecture recorded on June 4, laureate Bob Dylan described the influence on him of three literary works from his childhood: The Odyssey, All Quiet on the Western Front, and Moby-Dick. Soon after, writer Ben Greenman noted that in his lecture Dylan seemed to have invented a quote from Moby-Dick…I soon discovered that the Moby-Dick line Dylan dreamed up last week seems to be cobbled together out of phrases on the website SparkNotes, the online equivalent of CliffsNotes…Across the seventy-eight sentences in the lecture that Dylan spends describing Moby-Dick, even a cursory inspection reveals that more than a dozen of them appear to closely resemble lines from the SparkNotes site.

Without drilling into it too deeply, I’ll venture to say that if this all seems weird, it’s because Bob Dylan, of all people, after receiving the Nobel Prize for Literature, might have cribbed statements from an online study guide written by and for college students. But isn’t that how it always goes? Anecdotally speaking, plagiarists seem to draw from secondary or even tertiary sources, like encyclopedias, since the sort of careless or hurried writer vulnerable to indulging in it in the first place isn’t likely to grapple with the originals. The result is an inevitable degradation of information, like a copy of a copy. As Edward Tufte memorably observes in Visual Explanations: “Incomplete plagiarism leads to dequantification.” In context, he’s talking about the way in which illustrations and statistical graphics tend to lose data the more often they get copied. (In The Visual Display of Quantitative Information, he cites a particularly egregious example, in which a reproduction of a scatterplot “forgot to plot the points and simply retraced the grid lines from the original…The resulting figure achieves a graphical absolute zero, a null data-ink ratio.”) But it applies to all kinds of plagiarism, and it makes for a more compelling argument, I think, than the equally valid point that the author is cheating the source and the reader. In art or literature, it’s better to argue from aesthetics than ethics. If fact-checking strengthens a piece of writing, then plagiarism, with its effacing of sources and obfuscation of detail, can only weaken it. One is the opposite of the other, and it’s no surprise that the sins of plagiarism and fabrication tend to go together. They’re symptoms of the same underlying sloppiness, and this is why writers owe it to themselves—not to hypothetical readers or critics—to weed them out. 
A writer who is sloppy on small matters of fact can hardly avoid doing the same on the higher levels of an argument, and policing the one is a way of keeping an eye on the other. It isn’t always fun. But if you’re going to be a writer, as Dylan himself once said: “Now you’re gonna have to get used to it.”

Avocado’s number

Earlier this month, you may have noticed a sudden flurry of online discussion around avocado toast. It was inspired by a remark by a property developer named Tim Gurner, who said to the Australian version of 60 Minutes: “When I was trying to buy my first home, I wasn’t buying smashed avocados for nineteen bucks and four coffees at four dollars each.” Gurner’s statement, which was fairly bland and unmemorable in itself, was promptly transformed into the headline “Millionaire to Millennials: Stop Buying Avocado Toast If You Want to Buy a Home.” From there, it became the target of widespread derision, with commentators pointing out that if owning a house seems increasingly out of reach for many young people, it has more to do with rising real estate prices, low wages, and student loans than with their irresponsible financial habits. And the fact that such a forgettable sentiment became the focal point for so much rage—mostly from people who probably didn’t see the original interview—implies that it merely catalyzed a feeling that had been building for some time. Millennials, it’s fair to say, have been getting it from both sides. When they try to be frugal by using paper towels as napkins, they’re accused of destroying the napkin industry, but they’re also scolded over spending too much at brunch. They’re informed that their predicament is their own fault, except when they’re being idealized as “joyfully engaged in a project of creative destruction,” as Laura Marsh noted last year in The New Republic. “There’s nothing like being told precarity is actually your cool lifestyle choice,” Marsh wrote, unless it’s being told, as the middle class likes to maintain to the poor, that financial stability is only a matter of hard work and a few small sacrifices.

It also reflects an overdue rejection of what used to be called the latte factor, as popularized by the financial writer David Bach in such books as Smart Women Finish Rich. As Helaine Olen writes in Slate:

Bach calculated that eschewing a five-dollar daily bill at Starbucks—because who, after all, really needs anything at Starbucks?—for a double nonfat latte and biscotti with chocolate could net a prospective saver $150 a month, or $2,000 a year. If she then took that money and put it all in stocks that Bach, ever an optimist, assumed would grow at an average annual rate of eleven percent a year, “chances are that by the time she reached sixty-five, she would have more than $2 million sitting in her account.”
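To see how much the details of this arithmetic matter, here is a rough sketch in Python. It is not Bach's own calculation: the excerpt doesn't give the saver's starting age or how often deposits are made, so the forty-year horizon, the once-a-year deposits with annual compounding, the thirty-day month, and the three percent inflation rate below are all illustrative assumptions. Like Bach, it ignores taxes and fees.

```python
# Rough check of the "latte factor" arithmetic quoted above.
# Only the $5/day spend and the 11% return come from the quote; every other
# constant is a hypothetical assumption chosen for illustration.

DAILY_SPEND = 5.00     # dollars per day, from the quote
DAYS_PER_MONTH = 30    # assumption: turns $5/day into $150/month
NOMINAL_RETURN = 0.11  # Bach's assumed average annual return
INFLATION = 0.03       # hypothetical inflation rate
YEARS = 40             # hypothetical horizon, e.g. from age 25 to 65


def future_value(annual_contribution: float, annual_rate: float, years: int) -> float:
    """Balance after `years` end-of-year deposits, compounded once a year."""
    balance = 0.0
    for _ in range(years):
        # Last year's balance grows, then this year's deposit is added.
        balance = balance * (1 + annual_rate) + annual_contribution
    return balance


def real_rate(nominal: float, inflation: float) -> float:
    """Convert a nominal annual return into an inflation-adjusted one."""
    return (1 + nominal) / (1 + inflation) - 1


monthly = DAILY_SPEND * DAYS_PER_MONTH  # 150.0 a month
annual = monthly * 12                   # 1800.0 a year, which Bach rounds up to 2,000

rounded_up = future_value(2000, NOMINAL_RETURN, YEARS)
unrounded = future_value(annual, NOMINAL_RETURN, YEARS)
inflation_adjusted = future_value(annual, real_rate(NOMINAL_RETURN, INFLATION), YEARS)

print(f"Rounded up, nominal:      ${rounded_up:,.0f}")
print(f"Unrounded, nominal:       ${unrounded:,.0f}")
print(f"Unrounded, today's money: ${inflation_adjusted:,.0f}")
```

Under these assumptions, the rounded-up $2,000 a year grows to roughly $1.16 million, the unrounded $1,800 to about $1.05 million, and the inflation-adjusted version to something like $440,000 in today's money. A longer horizon would push every figure up, so this sketch says nothing definite about how Bach reached $2 million; it only shows how sensitive the result is to rounding and inflation.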

There are a lot of flaws in this argument. Bach rounds up his numbers, assumes an unrealistic rate of return, and ignores taxes and inflation. Most problematic of all is his core assumption that tiny acts of indulgence are what prevent the average investor from accumulating wealth. In fact, big, unpredictable risk factors and fixed expenses play a much larger role, as Olen points out:

Buying common luxury items wasn’t the issue for most Americans. The problem was the fixed costs, the things that are difficult to cut back on. Housing, health care, and education cost the average family seventy-five percent of their discretionary income in the 2000s. The comparable figure in 1973: fifty percent. Indeed, studies demonstrate that the quickest way to land in bankruptcy court was not by buying the latest Apple computer but through medical expenses, job loss, foreclosure, and divorce.

It turns out that incremental acts of daily discipline are powerless in the face of systemic factors that have a way of erasing all your efforts—and this applies to more than just personal finance. Back when I was trying to write my first novel, I was struck by the idea that if I managed to write just one hundred words every day, I’d have a manuscript in less than three years. I was so taken by this notion that I wrote it down on an index card and stuck it to my bathroom mirror. That was over a decade ago, and while I can’t quite remember how long I stuck with that regimen, it couldn’t have been more than a few weeks. Novels, I discovered, aren’t written a hundred words at a time, at least not in a fashion that can be banked in the literary equivalent of a penny jar. They’re the product of hard work combined with skills that can only be developed after a period of sustained engagement. There’s a lot of trial and error involved, and you can only arrive at a workable system through the kind of experience that comes from addressing issues of craft with maximal attention. Luck and timing also play a role, particularly when it comes to navigating the countless pitfalls that lie between a finished draft and its publication. In finance, we’re inclined to look at a historical return series and attribute it after the fact to genius, rather than to variables that are out of our hands. Similarly, every successful novel creates its own origin story. We naturally underestimate the impact of factors that can’t be credited to individual initiative and discipline. As a motivational tool, there’s a place for this kind of myth. But if novels were written using the literary equivalent of the latte factor, we’d have more novels, just as we’d have more millionaires.

Which isn’t to say that routine doesn’t play a crucial role. My favorite piece of writing advice ever is what David Mamet writes in Some Freaks:

As a writer, I’ve tried to train myself to go one achievable step at a time: to say, for example, “Today I don’t have to be particularly inventive, all I have to be is careful, and make up an outline of the actual physical things the character does in Act One.” And then, the following day to say, “Today I don’t have to be careful. I already have this careful, literal outline, and all I have to do is be a little bit inventive,” et cetera, et cetera.

A lot of writing comes down to figuring out what to do on any given morning—but it doesn’t mean doing the same thing each day. Knowing what achievable steps are appropriate at every stage is as important here as it is anywhere else. You can acquire this knowledge as systematically or haphazardly as you like, but you can also do everything right and still fail in the end. (If we define failure as spending years on a novel that will never be published, it’s practically a requirement of the writer’s education.) Books on writing and personal finance continue to take up entire shelves at bookstores, and they can sound very much alike. In “The Writer’s Process,” a recent, and unusually funny, humor piece in The New Yorker, Hallie Cantor expertly skewers their tone—“I give myself permission to write a clumsy first draft and vigorously edit it later”—and concludes: “Anyway, I guess that’s my process. It’s all about repetition, really—doing the same thing every single day.” We’ve all heard this advice. I’ve been guilty of it myself. But when you don’t take the big picture into account, it’s just a load of smashed avocado.

The Berenstain Barrier

If you’ve spent any time online in the last few years, there’s a decent chance that you’ve come across some version of what I like to call the Berenstain Bears enigma. It’s based on the fact that a sizable number of readers who recall this book series from childhood remember the name of its titular family as “Berenstein,” when in reality, as a glance at any of the covers will reveal, it’s “Berenstain.” As far as mass instances of misremembering are concerned, this isn’t particularly surprising, and certainly less bewildering than the Mandela effect, or the similar confusion surrounding a nonexistent movie named Shazam. But enough people have been perplexed by it to inspire speculation that these false memories may be the result of an errant time traveler, à la Asimov’s The End of Eternity, or an event in which some of us crossed over from an alternate universe in which the “Berenstein” spelling was correct. (If the theory had emerged a few decades earlier, Robert Anton Wilson might have devoted a page or two to it in Cosmic Trigger.) Even if we explain it as an understandable, if widespread, mistake, it stands as a reminder of how an assumption absorbed in childhood remains far more powerful than a falsehood learned later on. If we discover that we’ve been mispronouncing, say, “Steve Buscemi” for all this time, we aren’t likely to take it as evidence that we’ve ended up in another dimension, but the further back you go, the more ingrained such impressions become. It’s hard to unlearn something that we’ve believed since we were children—which indicates how difficult it can be to discard the more insidious beliefs that some of us are taught from the cradle.

But if the Berenstain Bears enigma has proven to be unusually persistent, I suspect that it’s because many of us really are remembering different versions of this franchise, even if we believe that we aren’t. (You could almost take it as a version of Hilary Putnam’s Twin Earth thought experiment, which asks if the word “water” means the same thing to us and to the inhabitants of an otherwise identical planet covered with a similar but different liquid.) As I’ve recently discovered while reading the books aloud to my daughter, the characters originally created by Stan and Jan Berenstain have gone through at least six distinct incarnations, and your understanding of what this series “is” largely depends on when you initially encountered it. The earliest books, like The Bike Lesson or The Bears’ Vacation, were funny rhymed stories in the Beginner Book style in which Papa Bear injures himself in various ways while trying to teach Small Bear a lesson. They were followed by moody, impressionistic works like Bears in the Night and The Spooky Old Tree, in which the younger bears venture out alone into the dark and return safely home after a succession of evocative set pieces. Then came big educational books like The Bears’ Almanac and The Bears’ Nature Guide, my own favorites growing up, which dispensed scientific facts in an inviting, oversized format. There was a brief detour through stories like The Berenstain Bears and the Missing Dinosaur Bone, which returned to the Beginner Book format but lacked the casually violent gags of the earlier installments. Next came perhaps the most famous period, with dozens of books like Trouble With Money and Too Much TV, all written, for the first time, in prose, and ending with a tidy, if secular, moral. Finally, and jarringly, there was an abrupt swerve into Christianity, with titles like God Loves You and The Berenstain Bears Go to Sunday School.

To some extent, you can chalk this up to the noise—and sometimes the degeneration—that afflicts any series that lasts for half a century. Incremental changes can lead to radical shifts in style and tone, and they only become obvious over time. (Peanuts is the classic example, but you can even see it in the likes of Dennis the Menace and The Family Circus, both of which were startlingly funny and beautifully drawn in their early years.) Fashions in publishing can drive an author’s choices, which accounts for the ups and downs of many a long career. And the bears only found Jesus after Mike Berenstain took over the franchise following the deaths of his parents. Yet many critics don’t bother making these distinctions, and the ones who hate the Berenstain Bears books seem to associate them entirely with the Trouble With Money period. In 2005, for instance, Paul Farhi of the Washington Post wrote:

The larger questions about the popularity of the Berenstain Bears are more troubling: Is this what we really want from children’s books in the first place, a world filled with scares and neuroses and problems to be toughed out and solved? And if it is, aren’t the Berenstain Bears simply teaching to the test, providing a lesson to be spit back, rather than one lived and understood and embraced? Where is the warmth, the spirit of discovery and imagination in Bear Country? Stan Berenstain taught a million lessons to children, but subtlety and plain old joy weren’t among them.

Similarly, after Jan Berenstain died, Hanna Rosin of Slate said: “As any right-thinking mother will agree, good riddance. Among my set of mothers the series is known mostly as the one that makes us dread the bedtime routine the most.”

Which only tells me that neither Farhi nor Rosin ever saw The Spooky Old Tree, which is a minor masterpiece—quirky, atmospheric, gorgeously rendered, and utterly without any lesson. It’s a book that I look forward to reading with my daughter. And while it may seem strange to dwell so much on these bears, it gets at a larger point about the pitfalls in judging any body of work by looking at a random sampling. I think that Peanuts is one of the great artistic achievements of the twentieth century, but it would be hard to convince anyone who was only familiar with its last two decades. You can see the same thing happening with The Simpsons, a series with six perfect seasons that threaten to be overwhelmed by the mediocre decades that are crowding the rest out of syndication. And the transformations of the Berenstain Bears are nothing compared to those of Robert A. Heinlein, whose career somehow encompassed Beyond This Horizon, Have Spacesuit—Will Travel, Starship Troopers, Stranger in a Strange Land, and I Will Fear No Evil. Yet there are also risks in drawing conclusions from the entirety of an artist’s output. In his biography of Anthony Burgess, Roger Lewis notes that he has read through all of Burgess’s work, and he asks parenthetically: “And how many have done that—except me?” He’s got a point. Trying to internalize everything, especially over a short period of time, can provide as false a picture as any subset of the whole, and it can result in a pattern that not even the author or the most devoted fan would recognize. Whether or not we’re from different universes, my idea of Bear Country isn’t the same as yours. That’s true of any artist’s work, and it hints at the problem at the root of all criticism: What do we talk about when we talk about the Berenstain Bears?

The two kinds of commentaries

There are two sorts of commentary tracks. The first kind is recorded shortly after a movie or television season is finished, or even while it’s still being edited or mixed, and before it comes out in theaters. Because their memories of the production are still vivid, the participants tend to be a little giddy, even punch drunk, and their feelings about the movie are raw: “The wound is still open,” as Jonathan Franzen put it to Slate. They don’t have any distance, and they remember everything, which means that they can easily get sidetracked into irrelevant detail. They don’t yet know what is and isn’t important. Most of all, they don’t know how the film did with viewers or critics, so their commentary becomes a kind of time capsule, sometimes laden with irony. The second kind of commentary is recorded long after the fact, either for a special edition, for the release of an older movie in a new format, or for a television series that is catching up with its early episodes. These tend to be less predictable in quality: while commentaries on recent work all start to sound more or less the same, the ones that reach deeper into the past are either disappointingly superficial or hugely insightful, without much room in between. Memories inevitably fade with time, but this can also allow the artist to be more honest about the result, and the knowledge of how the work was ultimately received adds another layer of interest. (For instance, one of my favorite commentaries from The Simpsons is for “The Principal and the Pauper,” with writer Ken Keeler and others ranting against the fans who declared it—preemptively, it seems safe to say—the worst episode ever.)

Perhaps most interesting of all are the audio commentaries that begin as the first kind, but end up as the second. You can hear it on the bonus features for The Lord of the Rings, in which, if memory serves, Peter Jackson and his cowriters start by talking about a movie that they finished years ago, continue by discussing a movie that they haven’t finished editing yet, and end by recording their comments for The Return of the King after it won the Oscar for Best Picture. (This leads to moments like the one for The Two Towers in which Jackson lays out his reasoning for pushing the confrontation with Saruman to the next movie—which wound up being cut for the theatrical release.) You also see it, on a more modest level, on the author’s commentaries I’ve just finished writing for my three novels. I began the commentary on The Icon Thief way back on April 30, 2012, or less than two months after the book itself came out. At the time, City of Exiles was still half a year away from being released, and I was just beginning the first draft of the novel that I still thought would be called The Scythian. I had a bit of distance from The Icon Thief, since I’d written a whole book and started another in the meantime, but I was still close enough that I remembered pretty much everything from the writing process. In my earliest posts, you can sense me trying to strike the right balance between providing specific anecdotes about the novel itself and offering more general thoughts on storytelling, while using the book mostly as a source of examples. And I eventually reached a compromise that I hoped would allow those who had actually read the book to learn something about how it was put together, while still being useful to those who hadn’t.

As a result, the commentaries began to stray further from the books themselves, usually returning to the novel under discussion only in the final paragraph. I did this partly to keep the posts accessible to nonreaders, but also because my own relationship with the material had changed. Yesterday, when I posted the last entry in my commentary on Eternal Empire, almost four years had passed since I finished the first draft of that novel. Four years is a long time, and it’s even longer in writing terms. If every new project puts a wall between you and the previous one, a series of barricades stands between these novels and me: I’ve since worked on a couple of book-length manuscripts that never got off the ground, a bunch of short stories, a lot of occasional writing, and my ongoing nonfiction project. With each new endeavor, the memory of the earlier ones grows dimmer, and when I go back to look at Eternal Empire now, not only do I barely remember writing it, but I’m often surprised by my own plot. This estrangement from a work that consumed a year of my life is a little sad, but it’s also unavoidable: you can’t keep all this information in your head and still stay sane. Amnesia is a coping strategy. We’re all programmed to forget many of our experiences—as well as our past selves—to free up capacity for the present. A novel is different, because it exists in a form outside the brain. Any book is a piece of its writer, and it can be as disorienting to revisit it as it is to read an old diary. As François Mauriac put it: “It is as painful as reading old letters…We touch it like a thing: a handful of ashes, of dust.” I’m not quite at that point with Eternal Empire, but I’ll sometimes read a whole series of chapters and think to myself, where did that come from?

Under the circumstances, I should count myself lucky that I’m still reasonably happy with how these novels turned out, since I have no choice but to be objective about it. There are things that I’d love to change, of course: sections that run too long, others that seem underdeveloped, conceits that seem too precious or farfetched or convenient. At times, I can see myself taking the easy way out, going with a shortcut or ignoring a possible implication because I lacked the time or energy to do it justice. (I don’t necessarily regret this: half of any writing project involves conserving your resources for when it really matters.) But I’m also surprised by good ideas or connections that seem to have come from outside of me, as if, to use Isaac Asimov’s phrase, I were writing over my own head. Occasionally, I’ll have trouble following my own logic, and the result is less a commentary than a forensic reconstruction of what I must have been thinking at the time. But if I find it hard to remember my reasoning today, it’s easier now than it will be next year, or after another decade. As I suspected at the time, the commentary exists more for me than for anybody else. It’s where I wrote down my feelings about a series of novels that once dominated my life, and which now seem like a distant memory. While I didn’t devote nearly as many hours to these commentaries as I did to the books themselves, they were written over a comparable stretch of time. And now that I’ve gotten to the point of writing a commentary on my commentary—well, it’s pretty clear that it’s time to stop.

Love and research

I’ve expressed mixed feelings about Jonathan Franzen before, but I don’t think there’s any doubt about his talent, or about his ability to infuriate readers in just the right way. His notorious essay on climate change in The New Yorker still irritates me, but it prompted me to think deeply on the subject, if only to articulate why I thought he was wrong. But Franzen isn’t a deliberate provocateur, like Norman Mailer was: instead, he comes across as a guy with deeply felt, often conflicted opinions, and he expresses them as earnestly as he can, even if he knows he’ll get in trouble for it. Recently, for instance, he said the following to Isaac Chotiner of Slate, in response to a question about whether he could ever write a book about race:

I have thought about it, but—this is an embarrassing confession—I don’t have very many black friends. I have never been in love with a black woman. I feel like if I had, I might dare…Didn’t marry into a black family. I write about characters, and I have to love the character to write about the character. If you have not had direct firsthand experience of loving a category of person—a person of a different race, a profoundly religious person, things that are real stark differences between people—I think it is very hard to dare, or necessarily even want, to write fully from the inside of a person.

It’s quite a statement, and it comes right at the beginning of the interview, before either Franzen or Chotiner has had a chance to properly settle in. Not surprisingly, it has already inspired a fair amount of snark online. But Franzen is being very candid here in ways that most novelists wouldn’t dare, and he deserves credit for it, even if he puts it in a way that is likely to make us uncomfortable. The question of authors writing about other races is particularly fraught, and the practical test that Franzen proposes is a better entry point than most. We shouldn’t discourage writers from imagining themselves into the lives of characters of different backgrounds, but we can insist on setting a high bar. (I’m talking mostly about literary fiction, by the way, which works hard to enter the consciousness of a protagonist or a society, and not necessarily about the ordinary diversity that I like to see in popular fiction, in which writers can—and often should—make the races of the characters an unobtrusive element in the story.) We could say, for instance, that a novel about race should be conceived from the inside out, rather than the outside in, and that it demands a certain intensity of experience and understanding to justify itself. Given the number of minority authors who are amply qualified to write about these issues firsthand, an outsider needs to earn the right to engage with the subject, and this requires something beyond well-intentioned concern. As Franzen rightly says in the same interview: “I feel it’s really dangerous, if you are a liberal white American, to presume that your good intentions are enough to embark on a work of imagination about black America.”

And Franzen’s position becomes easier to understand when framed within his larger concerns about research itself. As he once told The Guardian: “When information becomes free and universally accessible, voluminous research for a novel is devalued along with it.” Yet like just about everything Franzen says, this seemingly straightforward rule is charged with a kind of reflexive uneasiness, because he’s among the most obsessive of researchers. His novels are full of lovingly rendered set pieces that were obviously researched with enormous diligence, and sometimes they call attention to themselves, as Norman Mailer unkindly but accurately noted of The Corrections:

Everything of novelistic use to him that came up on the Internet seems to have bypassed the higher reaches of his imagination—it is as if he offers us more human experience than he has literally mastered, and this is obvious when we come upon his set pieces on gourmet restaurants or giant cruise ships or modern Lithuania in disarray. Such sections read like first-rate magazine pieces, but no better—they stick to the surface.

For a writer like Franzen, whose novels are ambitious attempts to fit everything he can within two covers, research is part of the game. But it’s also no surprise that the novelist who has tried the hardest to bring research back into mainstream literary fiction should also be the most agonizingly aware of its limitations.

These limitations are particularly stark when it comes to race, which, more than any other theme, demands to be lived and felt before it can be written. And if Franzen shies away from it with particular force, it’s because the set of skills that he has employed so memorably elsewhere is rendered all but useless here. It’s wise of him to acknowledge this, and he sets forth a useful test for gauging a writer’s ability to engage the subject. As he explains:

In the case of Purity, I had all this material on Germany. I had spent two and a half years there. I knew the literature fairly well, and I could never write about it because I didn’t have any German friends. The portal to being able to write about it was suddenly having these friends I really loved. And then I wasn’t the hostile outsider; I was the loving insider.

Research, he implies, takes you only so far, and love—defined as the love of you, the novelist, for another human being—carries you the rest of the way. Love becomes a kind of research, since it provides you with something like the painful vividness of empathy and feeling required to will yourself into the lives of others. Without talent and hard work, love isn’t enough, and it may not be enough even with talent in abundance. But it’s necessary, if not sufficient. And while it doesn’t tell us much about who ought to be writing about race, it tells us plenty about who shouldn’t.

The slow fade

Note: I’m on vacation this week, so I’ll be republishing a few of my favorite posts from earlier in this blog’s run. This post originally appeared, in a slightly different form, on September 16, 2014.

A while back, William Weir wrote an excellent piece in Slate about the decline of the fade-out in pop music, once ubiquitous, now nearly impossible to find. Of the top ten songs of 1985, every single one ended with a fade; in the three years before the article was written, there was only one, “Blurred Lines,” which in itself is a conscious homage to—or an outright plagiarism of—a much earlier model. Weir points to various possible causes for the fade’s disappearance, from the impatience of radio and iTunes listeners to advances in technology that allow producers to easily splice in a cold ending, and he laments the loss of the technique, which at its best produces an impression that a song never ends, but imperceptibly embeds itself into the fabric of the world beyond. (He also notes that a fade-out, more prosaically, can be used to conceal a joke or hidden message. One of my favorites, which he doesn’t mention, occurs in “Always On My Mind” by the Pet Shop Boys, which undermines itself with a nearly inaudible aside at the very end: “Maybe I didn’t love you…”)

The slow fade is a special case of what I’ve elsewhere called the Layla effect, in which a song creates an impression of transcendence or an extension into the infinite by the juxtaposition of two unrelated parts—although one of the few songs on that list that doesn’t end with a fade, interestingly, is “Layla” itself. As Weir points out, a proper fade involves more than just turning down the volume knob: it’s a miniature movement in its own right, complete with its own beginning, middle, and end, and it produces a corresponding shift in the listener’s mental state. He cites a fascinating study by the Hanover University of Music in Germany, which measured how long students tapped along to the rhythm of the same song in two different versions. When the song was given a cold ending, subjects stopped tapping an average of 1.4 seconds before the song was over, but with a fade-out, they continued to tap 1.04 seconds after the song ended, as if the song had somehow managed to extend itself beyond its own physical limits. As the Pet Shop Boys say elsewhere on Introspective, the music plays forever.

In some ways, then, a fade-out is the musical equivalent of the denouement in fiction, and it’s easy to draw parallels to different narrative strategies. A cold ending is the equivalent of the kind of abrupt close we see in many of Shakespeare’s tragedies, which rarely go on for long after the demise of the central character. (This may be due in part to the logistics of theatrical production: a scene change so close to the end would only sow confusion, and in the meantime, the leading actor is doing his best to lie motionless on the stage.) The false fade, in which a song like “Helter Skelter” pretends to wind down before abruptly ramping up again, has its counterpart in the false denouement, which we see in so many thrillers, perhaps most memorably in Thomas Harris’s Red Dragon. And the endless slow fade, which needs a long song like “Hey Jude” or “Dry the Rain” to sustain it, is reminiscent of the extended denouements in epic novels from War and Peace to The Lord of the Rings. The events of the epic wrench both the protagonist and reader out of everyday life, and after a thousand crowded pages, it takes time to settle us back into Bag End.

The fade, in short, is a narrative tool like any other, complete with its own rules and tricks of the trade. Weir quotes the sound engineer Jeff Rothschild, who says that in order for the fade to sound natural to a listener’s ear, the volume must “go down a little quicker at first, and then it’s a longer fade”—which is a strategy often employed in fiction, in which an abrupt conclusion to the central conflict is followed by a more gradual withdrawal. There are times, of course, when a sudden ending is what you want: Robert Towne himself admits that the original dying close of Chinatown isn’t as effective as the “simple severing of the knot” that Roman Polanski imposed. But it’s a mistake to neglect a tool both so simple and so insinuating. (A fade-in, which allows the song to edge gradually into our circle of consciousness, can create an equally haunting impression, as in Beethoven’s Ninth Symphony and one of my favorite deep cuts by the Beatles, George Harrison’s “I Want to Tell You.”) These days, we have a way of seeing songs as discrete items on a playlist, but they often work best if they’re allowed to spill over a bit to either side. An ending draws a line in the world, but sometimes it’s nice if it’s a little blurred.

The title search

Note: Every Friday, The A.V. Club, my favorite pop cultural site on the Internet, throws out a question to its staff members for discussion, and I’ve decided that I want to join in on the fun. This week’s topic: “What pop-culture title duplication do you find most annoying?”

At the thrift store up the street from my house, there’s a shelf that an enterprising employee has stocked with a selection of used books designed to make you look twice: The Time Traveler’s Wife, The Paris Wife, The Tiger’s Wife, A Reliable Wife, American Wife, The Zookeeper’s Wife, and probably some others I’ve forgotten. It’s funny to see them lined up in a row, but there’s a reason this formula is so popular: with an average of just three words, it gives us the germ of a story—at least in terms of its central relationship—and adds a touch of color to distinguish itself from its peers. As with the premises of most bestsellers, the result is both familiar and a little strange, telling us that we’re going to be told the story of a marriage, which is always somewhat interesting, but with a distinctive twist or backdrop. It’s perhaps for analogous reasons that the three most notable mainstream thrillers of the past decade are titled The Girl With the Dragon Tattoo, Gone Girl, and The Girl on the Train, the last of which is still awaiting its inevitable adaptation by David Fincher. Girl, in the context of suspense, has connotations of its own: when used to refer to an adult woman, it conveys the idea that she looks harmless, but she isn’t as innocent as she seems. (And I’m far from immune to this kind of thing: if there’s one noun that challenges Girl when it comes to its omnipresence in modern thriller titles, it’s Thief.)

When you look at the huge range of words available in any language, it can seem strange that the pool of marketable titles so often feels limited. Whenever I’m about to write a new story, I check Amazon and the Internet Speculative Fiction Database to make sure that the title hasn’t been used before, and in many cases, I’m out of luck. (Occasionally, as with “Cryptids,” I’ll grab a title that I’m surprised has yet to be claimed, and it always feels like snagging a prime domain name or Twitter handle.) Titles stick to the same handful of variations for a lot of reasons, sometimes to deliberately evoke or copy a predecessor, sometimes—as with erotica—to enable keyword searches, and sometimes because we’ve agreed as a culture that certain words are more evocative than others. As David Haglund of Slate once observed, everyone seems to think that tacking American onto the start of a title lends it a certain gravitas. For many movies, titles are designed to avoid certain implications, while inadvertently creating new clichés of their own. Studios love subtitles that allow them to avoid the numerals conventionally attached to a sequel or prequel, which is why we see so many franchise installments with a colon followed by Origins, Revolution, Resurrection, or Genesis, or sequels that offer us the Rise of, the Age of, the Dawn of, or the Revenge of something or other. I loved both Rise and Dawn of the Planet of the Apes, but I can’t help feeling that it’s a problem that their titles could easily have been interchanged.

Then, of course, there are the cases in which a title is simply reappropriated by another work: Twilight, The Host, Running Scared, Bad Company, Fair Game. This sort of thing can lead to its share of confusion, some of it amusing. Recently, while watching the Roger Ebert documentary Life Itself, my wife was puzzled by a sequence revolving around the movie Crash, only to belatedly realize that it was referring to the one by David Cronenberg, not Paul Haggis. From a legal perspective, there’s no particular reason why a title shouldn’t be reused: titles can’t be copyrighted, although you could be sued for unfair competition if enough money were on the line. Hence the inadvisability of publishing your own fantasy series called Game of Thrones or The Wheel of Time, although Wheel of Thrones is still ripe for the taking. (In Hollywood, disputes over titles are sometimes used as a bullying tactic, as in the otherwise inexplicable case of The Butler, which inspired Warner Bros. to file for arbitration because it had released a silent film of the same name in 1916. There’s also the odd but persistent rumor that Bruce Willis agreed to star in Live Free or Die Hard on condition that he be given the rights to the title Tears of the Sun—which raises the question of whether it was worth the trouble. Such cases, which are based on tenuous legal reasoning at best, always remind me of David Mamet’s line from State and Main, which knows as much as any film about how movie people think: “I don’t need a cause. I just need a lawyer.”)

Which just reminds us that titles are like any other part of the creative process, except more so. We feel that our choices have a talismanic quality, even if their true impact on success or failure is minimal, and that feeling is magnified by the fact that we have only a handful of words with which to make an impression. No title alone can guarantee a blockbuster, but we persist in thinking that it can, which may be why we return consistently to the same words or phrases. But it’s worth keeping an observation by Jorge Luis Borges in mind:

Except for the always astonishing Book of the Thousand Nights and One Night (which the English, equally beautifully, called The Arabian Nights) I believe that it is safe to say that the most celebrated works of world literature have the worst titles. For example, it is difficult to conceive of a more opaque and visionless title than The Ingenious Knight Don Quixote of La Mancha, although one must grant that The Sorrows of Young Werther and Crime and Punishment are almost as dreadful.

And that’s as true today as it ever was—it’s hard to think of a less interesting title than Breaking Bad. Still, the search continues, and sometimes, it pays off. Years ago, a writer and his editor were struggling to come up with a title for a debut novel, considering and discarding such contenders as The Stillness in the Water, The Silence of the Deep, and Leviathan Rising. Ultimately, they came up with over a hundred possibilities, none of them any good, and it was only twenty minutes before they were set to go to the printer that the editor finally gave up and said: “Okay, we’ll call the thing Jaws.”

The Uncanny Birdman

Frankly, I don’t think anyone needs to read an entire blog post on how I felt about the Oscars. You can’t throw a stone—or an Emma Stone—today without hitting a handful of think pieces, of which the one by Dan Kois on Slate is typical: he hyperbolically, though not inaccurately, describes the win of Birdman over Boyhood as the ceremony’s greatest travesty in twenty years. So I’m not alone when I say that after an afternoon of doing my taxes, the four hours I spent watching last night’s telecast were only marginally more engaging. It wasn’t a debacle of Seth MacFarlane proportions, but it left me increasingly depressed, and not even the sight of Julie Andrews embracing Lady Gaga, which otherwise ought to feel like the apotheosis of our culture, could pull me out of my funk. It all felt like a long slog toward the sight of a movie I loved getting trounced by one I like less with every passing day. Yet I’m less interested in unpacking the reasons behind the snub than in trying to figure out why this loss stings more than usual, especially because indignation over the Best Picture winner is all but an annual tradition. The most deserving nominee rarely, if ever, wins; it’s much more surprising when it happens than when it doesn’t. So why did this year’s outcome leave me so unhappy?

I keep coming back to the idea of the uncanny valley. You probably know that Masahiro Mori, a Japanese roboticist, was the first to point out that as the appearance of an artificial creature grows more lifelike, our feelings toward it become steadily more positive—but when it becomes almost but not quite human, small differences and discrepancies start to outweigh any points of similarity, and our empathy for it falls off a cliff. It’s why we can easily anthropomorphize and love the Muppets, but we’re turned off by the dead eyes of the characters in The Polar Express, and find zombies the most loathsome of all. (Zombies, at least, are meant to be terrifying; cognitively, it’s more troubling when we’re asked to react warmly to a digital Frankenstein that just wants to give us a hug.) And there’s an analogous principle at work when it comes to art. A bad movie, or one that falls comfortably outside our preferences, can be ignored or even enjoyed on its own terms, but if it feels like a zombified version of something we should love, it repels us. If a movie like The King’s Speech wins Best Picture, I’m not entirely bothered by this: it looks more or less like the kind of film the Oscars like to honor, and I can regard it as a clunky but harmless machine, even if it wasn’t made for me. But Birdman is exactly the kind of movie I ought to love, but don’t, so its win feels strangely creepy, even as it represents a refreshingly unconventional choice.

The uncanny valley troubles us because it’s a parody of ourselves: we’re forced to see the human face as it might appear to another species, which makes us wonder if our own standards of beauty might be equally alienating if our perspectives were shifted a degree to one side. That’s true of movies, too; a film that hits all the right marks but leaves us cold forces us to question why, exactly, we like what we do. For me, the classic example has always been Fight Club. Like Birdman, it’s a movie of enormous technical facility—ingenious, great to look at, and stuffed with fine performances. To its credit, it has more real ideas in any ten minutes, however misguided, than Birdman has in its entirety. Yet I’ve always disliked it, precisely because it devotes so much craft to a story with a void at its center. It’s the ultimate instance of cleverness as an end in itself, estranging us from its characters, its material, and its muddled message with a thousand acts of meaningless virtuosity. And I push back against it with particular force because it’s exactly the kind of movie that someone like me, who wasn’t me, might call a masterpiece. (It may not be an accident that both Birdman and Fight Club benefit from the presence of Edward Norton, who, like Kevin Spacey, starts as a blank but fills out each role with countless fiendishly clever decisions. If you’re going to make a movie like this at all, he’s the actor you want in your corner.)

As a result, the Oscars turned into a contest, real or perceived, between Boyhood, which reflected the most moving and meaningful memories of my own life despite having little in common with it, and Birdman, which confronted me with a doppelgänger of my feelings as a moviegoer. It’s no wonder I reacted so strongly. Yet perhaps it isn’t all bad. Birdman at least represents the return of Michael Keaton, an actor we didn’t know how much we’d missed until he came roaring back into our lives. And if David Fincher could rebound from Fight Club to become one of the two or three best directors of his generation, the same might be true of Iñárritu—although it isn’t encouraging that he’s been so richly rewarded for indulging in all his worst tendencies. Still, as Iñárritu himself said in his acceptance speech, time is the real judge. The inevitable backlash to Birdman, which is already growing, should have the effect of gently restoring it to its proper place, while Boyhood’s stature will only increase. As I’ve discussed at length elsewhere, Birdman is an audacious experiment that never needs to be repeated, while we need so many more movies like Boyhood, not so much because of its production schedule as because of its genuine curiosity, warmth, and generosity towards real human beings. As Mark Harris puts it, so rightly, on Grantland: “Birdman, after all, is a movie about someone who hopes to create something as good as Boyhood.”

The Serial box

Note: This post contains spoilers—if that’s the right word—for the last episode of Serial.

Deep down, I suspect that we all knew that Serial would end this way. Back in October, Mike Pesca of Slate recorded a plea to Sarah Koenig: “Don’t let this wind up being a contemplation on the nature of truth.” In the end, that’s pretty much what it was, to the point where it came dangerously close to resembling its own devastatingly accurate parody on Funny or Die. There’s a moment in the final episode when Adnan Syed, speaking from prison, might as well have been reading from a cue card to offer Koenig a way out:

I think you should just go down the middle. I think you shouldn’t really take a side. I mean, it’s obviously not my decision, it’s yours, but if I was to be you, just go down the middle…I think in a way you could even go point for point and in a sense you leave it up to the audience to decide.

Koenig doesn’t go quite that far—she says that if she were a juror at Adnan’s trial, she’d have voted for acquittal—but she does throw up her hands a bit. Ultimately, we’re left more or less back where we started, with a flawed prosecution that raised questions that were never resolved and a young man who probably shouldn’t have been convicted by the case the state presented. And we knew this, or most of it, almost from the beginning.

I don’t want to be too hard on Koenig, especially because she was always open about the fact that Serial might never achieve the kind of resolution that so many listeners desperately wanted. And its conclusion—that the truth is rarely a matter of black or white, and that facts can lend themselves to multiple interpretations—isn’t wrong. My real complaint is that it isn’t particularly interesting or original. I’ve noted before that Errol Morris can do in two hours what Koenig has done in ten, and now that the season is over, I feel more than ever that it represents a lost opportunity. The decision to center the story on the murder investigation, which contributed so much to its early popularity, seems fatally flawed when its only purpose is to bring us back around to a meditation on truth that others have expressed more concisely. Serial could have been so many things: a picture of a community, a portrait of a group of teenagers linked by a common tragedy, an examination of the social forces and turns of fate that culminated in the death of Hae Min Lee. It really ended up being none of the above, and there have been moments in the back half when I felt like shaking Koenig by the shoulders, to use her own image, and telling her that she’s ignoring the real story as she leads us down a rabbit hole with no exit.

In some ways, I’m both overqualified to discuss this issue and a bad data point, since I’ve been interested in problems of overinterpretation, ambiguity, and information overload for a long time, to the point of having written an entire novel to exorcise some of my thoughts on the subject. The Icon Thief is about a lot of things, but it’s especially interested in the multiplicity of readings that can be imposed on a single set of facts, or a human life, and how apparently compelling conclusions can evaporate when seen from a different angle. Even at the time, I knew that this theme was far from new: in film, it goes at least as far back as Antonioni’s Blow-Up, and I consciously modeled the plot of my own novel after such predecessors as The X-Files and Foucault’s Pendulum. Serial isn’t a conspiracy narrative, but it presented the same volume of enigmatic detail. Its discussions of call logs and cell phone towers tended to go in circles, always promising to converge on some pivotal discrepancy but never quite reaching it, and the thread of the argument was easy to lose. The mood—an obsessive, doomed search for clarity where none might exist—is what stuck with listeners. But we’ve all been here before, and over time, Serial seemed increasingly less interested in exploring possibilities that would take it out of that cramped, familiar box.

And one missed opportunity was especially stark in the finale: its failure to come to terms with the figure of Hae herself. Koenig notes that she struggled valiantly to get in touch with Hae’s family, and I don’t doubt that she did, but the materials were there for a more nuanced picture than we ever saw. Koenig had ample access to Adnan, for instance, who certainly knew Hae well, and there are times when we feel that she should have spent less time pressing him yet again for his whereabouts on the day of the murder, as she did up to the very end, and more time remembering the girl who disappeared. She also interviewed Don, Hae’s other boyfriend, whose account of how she taught him to believe in himself provided some of the last episode’s most moving moments. And, incredibly, she had Hae’s own diary, up to the heartbreaking entry she left the day before she died. With all this and more at Koenig’s disposal, the decision to keep Hae in the shadows feels less like a necessity than a questionable judgment call. And I can’t help but wish that we had closed, at the very end, with five minutes about Hae. It wouldn’t have given us the answers we wanted, but it might have given us what we, and she, deserved.

The slow fade

William Weir has an excellent piece in today’s Slate about the decline of the fade-out in pop music, once ubiquitous, now nearly impossible to find. Of the top ten songs of 1985, every single one ended with a fade; over the last three years, there has been only one, “Blurred Lines,” which in itself is a conscious homage to a much earlier model. Weir points to various possible causes for the fade’s disappearance, from the impatience of radio and iTunes listeners to advances in technology that allow producers to easily splice in a cold ending, and he laments the loss of the technique, which at its best produces an impression that a song never ends, but imperceptibly embeds itself into the fabric of the world beyond. (He also notes that a fade-out, more prosaically, can be used to conceal a joke or hidden message. One of my favorites, which he doesn’t mention, occurs in “Always On My Mind” by the Pet Shop Boys, which undermines itself with a nearly inaudible aside at the very end: “Maybe I didn’t love you…”)

The slow fade is a special case of what I’ve elsewhere called the Layla effect, in which a song creates an impression of transcendence or an extension into the infinite by the juxtaposition of two unrelated parts—although one of the few songs on that list that doesn’t end with a fade, interestingly, is “Layla” itself. As Weir points out, a proper fade involves more than just turning down the volume knob: it’s a miniature movement in its own right, complete with its own beginning, middle, and end, and it produces a corresponding shift in the listener’s mental state. He cites a fascinating study by the Hanover University of Music in Germany, which measured how long students tapped along to the rhythm of the same song in two different versions. When the song was given a cold ending, subjects stopped tapping an average of 1.4 seconds before the song was over, but with a fade-out, they continued to tap 1.04 seconds after the song ended, as if the song had somehow managed to extend itself beyond its own physical limits. As the Pet Shop Boys say elsewhere on Introspective, the music plays forever.

In some ways, then, a fade-out is the musical equivalent of the denouement in fiction, and it’s easy to draw parallels to different narrative strategies. A cold ending is the equivalent of the kind of abrupt close we see in many of Shakespeare’s tragedies, which rarely go on for long after the demise of the central character. (This may be due in part to the logistics of theatrical production: a scene change so close to the end would only sow confusion, and in the meantime, the leading actor is doing his best to lie motionless on the stage.) The false fade, in which a song like “Helter Skelter” pretends to wind down before abruptly ramping up again, has its counterpart in the false denouement, which we see in so many thrillers, perhaps most memorably in Thomas Harris’s Red Dragon. And the endless slow fade, which needs a long song like “Hey Jude” or “Dry the Rain” to sustain it, is reminiscent of the extended denouements in epic novels from War and Peace to The Lord of the Rings. The events of the epic wrench both the protagonist and reader out of everyday life, and after a thousand crowded pages, it takes time to settle us back into Bag End.

The fade, in short, is a narrative tool like any other, complete with its own rules and tricks of the trade. Weir quotes the sound engineer Jeff Rothschild, who says that in order for the fade to sound natural to a listener’s ear, the volume must “go down a little quicker at first, and then it’s a longer fade”—which is a strategy often employed in fiction, in which an abrupt conclusion to the central conflict is followed by a more gradual withdrawal. There are times, of course, when a sudden ending is what you want: Robert Towne himself admits that the original dying close of Chinatown isn’t as effective as the “simple severing of the knot” that Roman Polanski imposed. But it’s a mistake to neglect a tool both so simple and so insinuating. (A fade-in, which allows the song to edge gradually into our circle of consciousness, can create an equally haunting impression, as in Beethoven’s Ninth Symphony and one of my favorite deep cuts by the Beatles, George Harrison’s “I Want to Tell You.”) These days, we have a way of seeing songs as discrete items on a playlist, but they often work best if they’re allowed to spill over a bit to either side. An ending draws a line in the world, but sometimes it’s nice if it’s a little blurred.

MFA, NYC, and the Classics

On Friday, Slate published a long article, which originally appeared in N+1, about the dueling literary cultures of New York City and the typical MFA graduate program. The article is a bit of a slog—the author, Chad Harbach, while clearly talented and smart, veers uncertainly between jargon words like “normative” and precious coinages like “unself-consciousness”—but it’s hard not to grant one of its underlying points: that it’s now possible for a writer to make a comfortable living, primarily as a teacher, by appealing to a tiny slice of academic readers, and that this approach is, if anything, easier, safer, and more of a sure thing than the writing of “commercial” fiction, even as it manages to portray itself as the more honest and authentic way of life.

Now, I don’t have an MFA. And I’m the author of what is intended, frankly, as a big mainstream novel. (Whether it succeeds or not is another matter entirely.) But I do know what Harbach means when he notes that MFA programs are becoming “increasingly preprofessorial”—that is, a credential on the way to a teaching job, rather than preparation for a life as a working writer. It’s a trajectory that seems very similar to that of the Classics, which is something I know about firsthand.

There was a time, or so some would like to believe, when a classical education was seen as an essential part of one’s training for the larger world: it was taken for granted that our lawyers, doctors, and politicians should know some Latin and Greek. These days, though, such departments seem primarily interested in training future professors of Classics. And, perhaps as a direct result, there are fewer and fewer Classics majors every year. (In my graduating class, there were something like fourteen, out of a student body of sixteen hundred.)

The same thing, I think, is likely to happen to MFA departments, if they continue along the track that Harbach describes. If author/professors continue to produce short stories exclusively for one another, interest in literary fiction will gradually die, no matter how many anthologies continue to “disseminate, perpetuate, and replenish” the canon. Like the Classics, university writing programs will remain a comfortable career option for a lucky few, but enrollment will inevitably decline, along with the sense of urgency and risk that all good fiction requires.

The major difference between an MFA and a Classics degree, of course, is that the former is much easier. That, too, is likely to change, especially if, as Harbach tentatively predicts, the MFA comes to focus more on the novel, rather than the short story, and continues to put its primary emphasis on literary theory. After all, with only so many tenured professorships available, there has to be some way to thin out the crowd. And if you don’t believe me, just look at the Classics. If you want to restrict a field to the professorial class, such an approach certainly works. It works all too well.